Details

- Bug
- Resolution: Unresolved
- Major
- None
- 3.0.0-beta2
- None
- 1

Description

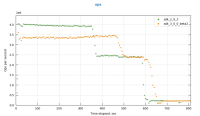

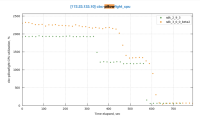

We are seeing an approximately 40% decrease in throughput after upgrading cbc-pillowfight from 2.10.0 to 2.10.4.
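For reference, a throughput decrease like the one quoted here is measured relative to the 2.10.0 baseline. The sketch below shows that arithmetic. `percent_decrease` is a hypothetical helper, and because this report does not give absolute ops/sec figures, no real values are filled in.

```python
def percent_decrease(baseline_ops: float, new_ops: float) -> float:
    """Return the drop from baseline_ops to new_ops as a percentage of baseline_ops."""
    if baseline_ops <= 0:
        raise ValueError("baseline_ops must be positive")
    # Multiply before dividing to keep round inputs exact in floating point.
    return (baseline_ops - new_ops) * 100.0 / baseline_ops
```

With hypothetical figures, a baseline of 100,000 ops/sec falling to 60,000 ops/sec is a 40% decrease.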

Test:

Max ops/sec, cbc-pillowfight, 2 nodes, 80/20 R/W, 512B JSON items, 1K batch size

http://showfast.sc.couchbase.com/#/timeline/Linux/kv/max_ops/all

Build: 6.5.0-3939
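To give a rough sense of what this workload mix means per batch, the sketch below splits a batch into reads and writes and estimates the document bytes written. It is a simplified model for intuition only. It assumes "1K batch size" means 1,000 operations per batch and that every write carries a 512-byte document. `split_batch` and `write_payload_bytes` are hypothetical helpers, and cbc-pillowfight does not necessarily choose operations this deterministically.

```python
def split_batch(batch_size: int, read_pct: int) -> tuple[int, int]:
    """Split a batch into (reads, writes) for a read percentage in [0, 100]."""
    if not 0 <= read_pct <= 100:
        raise ValueError("read_pct must be between 0 and 100")
    reads = batch_size * read_pct // 100
    return reads, batch_size - reads


def write_payload_bytes(batch_size: int, read_pct: int, item_size_bytes: int = 512) -> int:
    """Estimate document bytes written per batch (keys and protocol overhead excluded)."""
    _, writes = split_batch(batch_size, read_pct)
    return writes * item_size_bytes
```

Under these assumptions, the 80/20 test issues 800 reads and 200 writes per 1,000-operation batch, or about 100 KiB of document bodies written per batch; the 50/50 and 20/80 tests write 500 and 800 documents per batch respectively.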

With 2.10.0: http://perf.jenkins.couchbase.com/job/ares/10240/

https://s3-us-west-2.amazonaws.com/perf-artifacts/jenkins-ares-10240/172.23.133.11.zip

https://s3-us-west-2.amazonaws.com/perf-artifacts/jenkins-ares-10240/172.23.133.12.zip

https://s3-us-west-2.amazonaws.com/perf-artifacts/jenkins-ares-10240/172.23.133.13.zip

https://s3-us-west-2.amazonaws.com/perf-artifacts/jenkins-ares-10240/172.23.133.14.zip

With 2.10.4: http://perf.jenkins.couchbase.com/job/ares/10324/

https://s3-us-west-2.amazonaws.com/perf-artifacts/jenkins-ares-10324/172.23.133.11.zip

https://s3-us-west-2.amazonaws.com/perf-artifacts/jenkins-ares-10324/172.23.133.12.zip

https://s3-us-west-2.amazonaws.com/perf-artifacts/jenkins-ares-10324/172.23.133.13.zip

https://s3-us-west-2.amazonaws.com/perf-artifacts/jenkins-ares-10324/172.23.133.14.zip

Analysis:

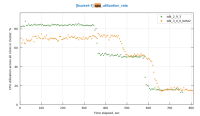

The graphs show that 2.10.0 starts with higher throughput and then drops to the same level as 2.10.4. The higher ops/sec in 2.10.0 correlate with higher CPU utilization. 2.10.0 also has larger replica DCP and disk write queues, which may indicate that the way 2.10.4 handles sync rep is causing the drop. We only see this issue in this one test. Other tests that do not show it include:

Max ops/sec, cbc-pillowfight, 2 nodes, 50/50 R/W, 512B JSON items, 1K batch size

Max ops/sec, cbc-pillowfight, 2 nodes, 80/20 R/W, 512B JSON items, 1K batch size, 10 vCPU

Max ops/sec, cbc-pillowfight, 2 nodes, 20/80 R/W, 512B JSON items, 1K batch size

There are more, but we want to highlight that this only happens in the 80/20 test with no encryption and the full set of CPUs.
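The analysis compares the shape of the throughput curves (2.10.0 starting higher, then converging with 2.10.4) rather than a single number. One way to quantify that pattern is to compare mean ops/sec over an early window and a late window of each run. The sketch below assumes the per-interval ops/sec samples behind the graphs are available as a list; `window_means` and the default 25% window are hypothetical choices, not part of the perf framework.

```python
from statistics import mean


def window_means(samples: list[float], frac: float = 0.25) -> tuple[float, float]:
    """Return (mean of the first frac of samples, mean of the last frac of samples)."""
    if not samples:
        raise ValueError("samples must be non-empty")
    if not 0 < frac <= 0.5:
        raise ValueError("frac must be in (0, 0.5]")
    # Use at least one sample per window so short series still work.
    n = max(1, int(len(samples) * frac))
    return mean(samples[:n]), mean(samples[-n:])
```

If the early means differ between the 2.10.0 and 2.10.4 runs while the late means are close, that matches the behavior described above.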