Details

Bug

Resolution: Fixed

Critical

5.5.0

Cluster: hebe_kv

OS: CentOS 7

CPU: E5-2680 v3 (48 vCPU)

Memory: 64GB

Disk: Samsung Pro 850

Untriaged

Yes

Description

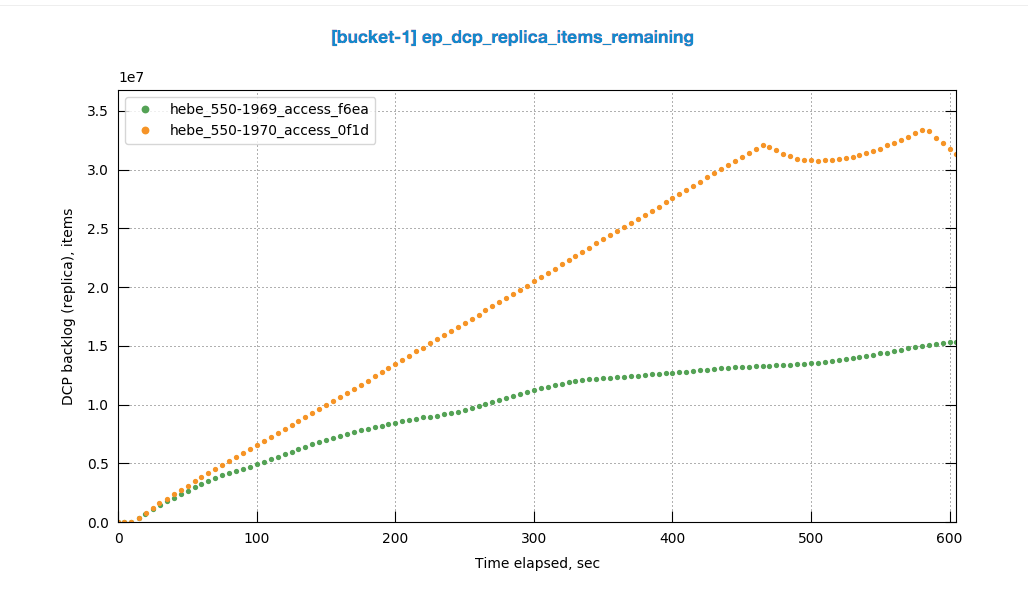

Test env and scenario:

3 nodes, 1 replica

20M items in the bucket, 1M ops/sec (50/50 R/W) ongoing
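
For a rough sense of the replication load this scenario implies, the arithmetic can be sketched as below. The figures come from the scenario above; the assumptions that every write yields exactly one mutation per replica and that load is spread evenly across nodes are illustrative simplifications, and `replication_load` is a hypothetical helper, not part of any Couchbase tool.

```python
# Workload figures taken from the test scenario.
TOTAL_OPS_PER_SEC = 1_000_000  # 1M ops/sec
WRITE_FRACTION = 0.5           # 50/50 R/W
NODES = 3
REPLICAS = 1


def replication_load(total_ops, write_fraction, nodes, replicas):
    """Return (cluster-wide, per-node) replicated mutations per second.

    Assumption: each write produces one mutation per replica and the
    load is distributed evenly across all nodes.
    """
    writes_per_sec = total_ops * write_fraction
    cluster_rate = writes_per_sec * replicas
    return cluster_rate, cluster_rate / nodes


cluster_rate, per_node_rate = replication_load(
    TOTAL_OPS_PER_SEC, WRITE_FRACTION, NODES, REPLICAS
)
print(f"cluster-wide: {cluster_rate:.0f} mutations/sec")
print(f"per node:     {per_node_rate:.0f} mutations/sec")
```

Under these assumptions, any sustained shortfall in the rate at which a node drains its queue translates directly into queue growth.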

Despite a similar replication rate, the replication queue on 5.5.0-1970 grows much faster than on 5.5.0-1969, causing overall performance degradation due to low-memory scenarios such as DGM.
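
To put a number on "grows much faster", one option is to fit a straight line to sampled queue sizes for each build and compare the slopes. The following is a minimal sketch, assuming the samples are available as `(seconds, queue_items)` pairs however they were collected; `queue_growth_rate` is a hypothetical helper and not part of the test harness.

```python
def queue_growth_rate(samples):
    """Least-squares slope of queue size over time, in items per second.

    `samples` is a sequence of (time_seconds, queue_items) pairs.
    A positive result means the queue is filling faster than it drains.
    """
    if len(samples) < 2:
        raise ValueError("need at least two samples")

    n = len(samples)
    mean_t = sum(t for t, _ in samples) / n
    mean_q = sum(q for _, q in samples) / n

    # Standard simple linear regression slope: cov(t, q) / var(t).
    numerator = sum((t - mean_t) * (q - mean_q) for t, q in samples)
    denominator = sum((t - mean_t) ** 2 for t, _ in samples)
    if denominator == 0:
        raise ValueError("all samples share the same timestamp")

    return numerator / denominator
```

Running this over the same time window for both builds gives two slopes that can be compared directly, independent of the absolute queue size at the start of the run.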

Changes in 5.5.0-1970:

[+] 4fa4905 -------MB-26021

https://github.com/couchbase/kv_engine/commit/4fa490526120424e82227b431ec0bb84b487ed37

[+] 90c76d4 -------MB-26021

https://github.com/couchbase/kv_engine/commit/90c76d4f0d99ef68ff5adb2fb667a4e20383a728

Server logs:

https://s3-us-west-2.amazonaws.com/perf-artifacts/jenkins-hebe-tmp-32/172.23.100.204.zip

https://s3-us-west-2.amazonaws.com/perf-artifacts/jenkins-hebe-tmp-32/172.23.100.205.zip

https://s3-us-west-2.amazonaws.com/perf-artifacts/jenkins-hebe-tmp-32/172.23.100.206.zip

5.5.0-1969 versus 5.5.0-1970, replication queue:

Also, a similar comparison using pillowfight test results:

Logs from the 2-node pillowfight test:

https://s3-us-west-2.amazonaws.com/perf-artifacts/jenkins-ares-7547/172.23.133.13.zip

https://s3-us-west-2.amazonaws.com/perf-artifacts/jenkins-ares-7547/172.23.133.14.zip