Details

-

Bug

-

Resolution: Fixed

-

Blocker

-

5.5.0

-

5.5.0-2388

-

Untriaged

-

Centos 64-bit

-

-

Unknown

Description

See the following mail thread for the discussion that is happening so that we have a context of why this bug was failed. Meanwhile I will also have few more runs with cbcollect logs before the cluster being dismantled as requested by Abhishek Singh .

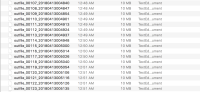

I have attached the existing cbcollect_info in the logs section.

From: Brett Lawson <>

|

Date: Friday, April 6, 2018 at 2:20 PM

|

To: Abhishek Singh <>, Sriram Melkote <>, Matt Ingenthron <>, Dave Finlay <>

|

Cc: Balakumaran Gopal <>

|

Subject: Re: MB-28968 - System RBAC causing intermittent rebalance hangs

|

|

|

Hey Abhishek,

|

|

|

I would suggest looking at [2] instead as it has slightly more information and comes from the latest documentation, but it is effectively the same thing.

|

|

====

|

|

As I mentioned above, NO_ACCESS is simply a notification that you do not have access to a specific resource (whether that be a specific bucket, document, operation, etc..). According to the spec, it would be a bug if libcouchbase is throwing away connections based on that status (though that would explain how it resolves the connection issue so quickly). I’ll speak with Sergey, our libcouchbase maintainer in the morning and see if that might be the reason for our differences. Additionally, I’ll try to think up some potential fixes overnight and hopefully we can get a Wireshark capture that concretely points at this being the cause.

|

|

|

Cheers, Brett

|

|

|

[2] https://developer.couchbase.com/documentation/server/5.1/sdk/go/collecting-information-and-logging.html

|

|

From: Abhishek Singh <>

|

Date: Friday, April 6, 2018 at 1:39 AM

|

To: Brett Lawson <>, Sriram Melkote <>, Matt Ingenthron <>, Dave Finlay <>

|

Cc: Balakumaran Gopal <>

|

Subject: Re: MB-28968 - System RBAC causing intermittent rebalance hangs

|

|

Hi Brett,

|

|

> It may also be helpful to enable gocb/gocbcore logging if you have not already.

|

|

In Eventing master builds, we haven’t done anything specific change w.r.t. gocb logging. We simply use it as a package with all default setup configs. I suspect based on messages within Eventing log files, gocb/gocbcore related log messages are absent. Please share how to enable the logging for gocb/gocbcore to capture debugging info from SDK side or is it ok to just follow step mentioned on [1]?

|

|

====

|

|

Just based on Eventing stats dump, it seems like this problem isn’t manifested by LCB. With LCB, I think it internally keeps on retrying until it gets a valid rbac auth to perform bucket/n1ql op – so even if cbauth provides stale creds or Eventing creds cached is stale, it would hit script timeout. Script timeout is execution time threshold that Eventing enforces on JS code execution, in this case stats from V8 sandbox (which uses LCB) doesn’t report non-zero “timeout_count”.

|

|

2018-04-04T02:16:14.598-07:00 [Info] eventing-consumer [worker_Function_900706978_test_kv_rebalance_in_when_existing_eventing_node_is_processing_mutation_7:33998:9666] v8worker failure stats : {"bucket_op_exception_count":0, "n1ql_op_exception_count":0, "timeout_count":0, "checkpoint_failure_count":0,"dcp_events_lost": 0,"v8worker_events_lost": 0,"app_worker_setting_events_lost": 0,"timer_events_lost": 0,"debugger_events_lost": 0,"mutation_events_lost": 0,"delete_events_lost": 0,"cron_timer_events_lost": 0,"doc_timer_events_lost": 0,"timestamp" : "2018-04-04T09:16:14Z"}

|

|

Also, there is bit of difference seen w.r.t. period rbac creds are requested by LCB vs GoCB(Note: KV rebalance has finished before 2018-04-04T02:18:02.975-07:00):

|

|

LCB

|

431 2018-04-04T02:15

|

272 2018-04-04T02:16

|

|

GoCB

|

51 2018-04-04T02:15

|

89 2018-04-04T02:16

|

48 2018-04-04T02:17

|

5 2018-04-04T02:23

|

|

[1] https://developer.couchbase.com/documentation/server/4.0/sdks/go-beta/logging.html

|

|

|

Thanks,

|

Abhishek

|

|

|

From: Brett Lawson <>

|

Date: Friday, April 6, 2018 at 1:27 PM

|

To: Sriram Melkote <>, Abhishek Singh <>, Matt Ingenthron <>, Dave Finlay <>

|

Cc: Balakumaran Gopal <>

|

Subject: Re: MB-28968 - System RBAC causing intermittent rebalance hangs

|

|

Hey Siri,

|

|

|

Any chance we could gather together a Wireshark capture and eventing log (as below) that can be vaguely correlated in time so that I can investigate what the SDK is doing and seeing when this starts to occur? This is probably the quickest path to identifying the specific cause for the SDKs inability to perform operations. It may also be helpful to enable gocb/gocbcore logging if you have not already.

|

|

A possible avenue to investigate here in the packet trace: NO_ACCESS is not able to be a ‘connection fatal’ event due to it being seen for various valid RBAC instances; I’m not certain about the architecture of cbauth and credentials passing, but we’ve previous had issues related to gocb reacting to rebalances very quickly, even before the appropriate permissions had been propagated (this came up in MB-26144 or one of its spin-offs). It’s possible we are seeing that again here, and the SDK doesn’t have enough context to know it needs to attempt to establish the connection again for up to date permissions. You can check if this is happening by investigating that the SDK is correctly performing SASL_AUTH and SELECT_BUCKET, and then watching to see if the SDK is getting NO_ACCESS all the way until it creates a new connection (which it will occasionally do during cluster map change notifications).

|

|

|

Cheers, Brett

|

|

From: Sriram Melkote <>

|

Date: Friday, April 6, 2018 at 12:32 AM

|

To: Abhishek Singh <>, Matt Ingenthron <>, Brett Lawson <>, Dave Finlay <>

|

Cc: Balakumaran Gopal <>

|

Subject: Re: MB-28968 - System RBAC causing intermittent rebalance hangs

|

|

Hi Brett/Dave,

|

|

Bala is filing a bug with cbcollects. But I'm emailing to get this thread started as it appears in several bugs.

|

|

My understanding is:

|

1. It is responsibility of gocb to refresh the node maps when rebalance occurs

|

2. And it is ns_server's responsibility to provide us with working credentials to access a remote node

|

|

Both these being fairly old code, I'd be surprised if it has a bug. But I'm puzzled what else can lead to what we're seeing of access being denied for SET/GET operations for extended durations (6 minutes) below?

|

|

Thanks,

|

Siri

|

|

|

From: Abhishek Singh <>

|

Date: Thursday, April 5, 2018 at 9:36 PM

|

To: Sriram Melkote <>

|

Cc: eventing <>

|

Subject: MB-28968 - System RBAC causing intermittent rebalance hangs

|

|

Hi Siri,

|

|

QE test case that happen to replicate the issue (but it's intermittent):

|

|

./testrunner -i /tmp/testexec.17209.ini -p get-cbcollect-info=True,GROUP=bucket_op_with_timers -t eventing.eventing_rebalance.EventingRebalance.test_kv_rebalance_in_when_existing_eventing_node_is_processing_mutations,nodes_init=6,services_init=kv-kv-eventing-eventing-eventing-index:n1ql,dataset=default,groups=simple,reset_services=True,doc-per-day=10,sock_batch_size=8,worker_count=8,cpp_worker_thread_count=8,handler_code=bucket_op_with_timers,GROUP=bucket_op_with_timers

|

|

Relevant cbcollect(log snippets from it referenced below): https://s3.amazonaws.com/bugdb/jira/MB-28968/test_11.zip

|

Another cbcollect where same problem manifested: https://s3.amazonaws.com/bugdb/jira/MB-28968/test_16.zip

|

|

Cluster had 3 eventing nodes [172.23.109.71:8096 172.23.109.70:8096 172.23.109.69:8096] and in the test a new KV node is rebalanced in

|

|

Eventing Node 172.23.109.70:8096 receives notif from ns_server for rebalance start and 172.23.109.70:8096 notifies other Eventing nodes in the cluster about it. Snippet from node 170 showing Eventing rebalance started at 2018-04-04T02:18:02.975-07:00

|

|

2018-04-04T02:18:02.975-07:00 [Info] ServiceMgr::StartTopologyChange change: service.TopologyChange{ID:"13d3d8939120d769b51395838c7d4de5", CurrentTopologyRev:service.Revision(nil), Type:"topology-change-rebalance", KeepNodes:[]struct { NodeInfo service.NodeInfo "json:\"nodeInfo\""; RecoveryType service.RecoveryType "json:\"recoveryType\"" }{struct { NodeInfo service.NodeInfo "json:\"nodeInfo\""; RecoveryType service.RecoveryType "json:\"recoveryType\"" }{NodeInfo:service.NodeInfo{NodeID:"da8e09e209d20b328d6667e85eb27af9", Priority:0, Opaque:interface {}(nil)}, RecoveryType:"recovery-full"}, struct { NodeInfo service.NodeInfo "json:\"nodeInfo\""; RecoveryType service.RecoveryType "json:\"recoveryType\"" }{NodeInfo:service.NodeInfo{NodeID:"bbdd26f7126754137fc4955609f0dd86", Priority:0, Opaque:interface {}(nil)}, RecoveryType:"recovery-full"}, struct { NodeInfo service.NodeInfo "json:\"nodeInfo\""; RecoveryType service.RecoveryType "json:\"recoveryType\"" }{NodeInfo:service.NodeInfo{NodeID:"971ab380217bab731ddbdf226a0de842", Priority:0, Opaque:interface {}(nil)}, RecoveryType:"recovery-full"}}, EjectNodes:[]service.NodeInfo{}}

|

2018-04-04T02:18:03.101-07:00 [Info] ServiceMgr::StartTopologyChange Active Eventing nodes in the cluster: [172.23.109.71:8096 172.23.109.70:8096 172.23.109.69:8096]

|

2018-04-04T02:18:03.101-07:00 [Info] ServiceMgr::StartTopologyChange Querying nodes: [172.23.109.71:8096 172.23.109.70:8096 172.23.109.69:8096] for bootstrap status

|

2018-04-04T02:18:03.110-07:00 [Info] ServiceMgr::StartTopologyChange Status of app bootstrap across all Eventing nodes: false

|

2018-04-04T02:18:03.132-07:00 [Info] ServiceMgr::StartTopologyChange Starting up rebalancer

|

|

All 6 workers on node 172.23.109.69 got the notification that rebalance has started and start with this work of vbucket migration as per the plan

|

|

1227010:2018-04-04T02:18:08.491-07:00 [Info] Consumer::controlRoutine [worker_Function_900706978_test_kv_rebalance_in_when_existing_eventing_node_is_processing_mutation_2:43729:9602] Got notification that cluster state has changed

|

1227013:2018-04-04T02:18:08.493-07:00 [Info] Consumer::controlRoutine [worker_Function_900706978_test_kv_rebalance_in_when_existing_eventing_node_is_processing_mutation_6:36338:9651] Got notification that cluster state has changed

|

1227016:2018-04-04T02:18:08.495-07:00 [Info] Consumer::controlRoutine [worker_Function_900706978_test_kv_rebalance_in_when_existing_eventing_node_is_processing_mutation_0:35565:9595] Got notification that cluster state has changed

|

1227019:2018-04-04T02:18:08.498-07:00 [Info] Consumer::controlRoutine [worker_Function_900706978_test_kv_rebalance_in_when_existing_eventing_node_is_processing_mutation_1:34750:9621] Got notification that cluster state has changed

|

1227021:2018-04-04T02:18:08.499-07:00 [Info] Consumer::controlRoutine [worker_Function_900706978_test_kv_rebalance_in_when_existing_eventing_node_is_processing_mutation_3:41311:9612] Got notification that cluster state has changed

|

1237730:2018-04-04T02:18:23.398-07:00 [Info] Consumer::controlRoutine [worker_Function_900706978_test_kv_rebalance_in_when_existing_eventing_node_is_processing_mutation_4:42526:9637] Got notification that cluster state has changed

|

1238065:2018-04-04T02:18:24.158-07:00 [Info] Consumer::controlRoutine [worker_Function_900706978_test_kv_rebalance_in_when_existing_eventing_node_is_processing_mutation_5:33579:9633] Got notification that cluster state has changed

|

|

As ns_server rebalances KV + Views first before informing any MDS role about rebalance, in this case too it can be seen that Eventing started seeing STREAMEND because of vbucket movement as part of KV rebalance before Eventing rebalance was kicked off.

|

Around that time, I also see GoCB making calls to request rbac auth for KV node (log snippet):

|

|

2018-04-04T02:15:14.350-07:00 [Info] DynamicAuthenticator::Credentials Authenticating endpoint: 172.23.109.49:11210 bucket: metadata

|

2018-04-04T02:15:14.356-07:00 [Info] DynamicAuthenticator::Credentials Authenticating endpoint: 172.23.109.67:11210 bucket: metadata

|

2018-04-04T02:15:14.356-07:00 [Info] DynamicAuthenticator::Credentials Authenticating endpoint: 172.23.109.49:11210 bucket: metadata

|

2018-04-04T02:15:14.520-07:00 [Info] DynamicAuthenticator::Credentials Authenticating endpoint: 172.23.109.67:11210 bucket: src_bucket

|

|

|

But starting at 2018-04-04T02:16:14.757-07:00, bucket reads/writes via GoCB starts starts throwing "no access" error - which likely means memcached is discarding supplied rbac auth:

|

|

(first entry)

|

2018-04-04T02:16:14.757-07:00 [Error] Consumer::getMetaOpCallback [worker_Function_900706978_test_kv_rebalance_in_when_existing_eventing_node_is_processing_mutation_6:36338:9651] Key: <ud>Function_900706978_test_kv_rebalance_in_when_existing_eventing_node_is_processing_mutation::vb::273</ud> path: <ud>last_processed_seq_no</ud> subdoc lookup failed, err: no access

|

|

(last entry from supplied cbcollect)

|

2018-04-04T02:22:54.898-07:00 [Error] Consumer::getOpCallback [worker_Function_900706978_test_kv_rebalance_in_when_existing_eventing_node_is_processing_mutation_7:33998:9666] Bucket fetch failed for key: <ud>Function_900706978_test_kv_rebalance_in_when_existing_eventing_node_is_processing_mutation::vb::318</ud>, err: no access

|

|

So, the "no access" error lasts for good 6 minutes and Eventing workers aren't able to update metadata - which can basically hang KV rebalance-in in this test case

|

|

➜ cbcollect_info_ns_1@172.23.109.69_20180404-092733 grep "no access" ns_server.eventing.log | wc -l

|

4461

|

|

Thanks,

|

Abhishek

|