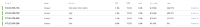

Details

- Type: Bug
- Resolution: Fixed
- Priority: Critical
- 6.5.0
- Enterprise Edition 6.5.0 build 4874
- Untriaged
- CentOS 64-bit
- Unknown

Description

Build: 6.5.0-4874

Scenario:

- 4-node cluster with a Couchbase bucket loaded with 1K items

- Hard-fail over one node, then add the failed-over node back

- Rebalance the cluster
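For reference, the three scenario steps correspond roughly to the Couchbase Server REST calls below. This is a minimal sketch, not the testrunner implementation: it only builds the requests and sends nothing. Several things are assumptions: the two `192.0.2.x` node names are hypothetical placeholders (only `.205` and `.206` appear in the logs), the report does not say which node was failed over, and `recoveryType=full` is assumed because the report does not say whether full or delta recovery was used. Verify the endpoint parameters against the 6.5 REST documentation.

```python
from urllib.parse import urlencode

# Placeholder node names: only .205 and .206 appear in the report;
# the 192.0.2.x (documentation range) addresses are hypothetical.
NODES = [
    "ns_1@172.23.105.205",
    "ns_1@172.23.105.206",
    "ns_1@192.0.2.3",
    "ns_1@192.0.2.4",
]


def repro_requests(nodes, failed_node, recovery_type="full"):
    """Build (method, path, form body) tuples for the three scenario steps.

    No requests are sent. recovery_type="full" is an assumption; the report
    does not say whether full or delta recovery was used.
    """
    return [
        # Step 1: hard failover of one node.
        ("POST", "/controller/failOver",
         urlencode({"otpNode": failed_node})),
        # Step 2: mark the failed-over node for add-back.
        ("POST", "/controller/setRecoveryType",
         urlencode({"otpNode": failed_node, "recoveryType": recovery_type})),
        # Step 3: rebalance with all nodes known and none ejected.
        ("POST", "/controller/rebalance",
         urlencode({"knownNodes": ",".join(nodes), "ejectedNodes": ""})),
    ]


# Hypothetical choice of failed-over node; the report does not name it.
for method, path, body in repro_requests(NODES, failed_node=NODES[0]):
    print(method, path, body)
```

Each tuple would then be POSTed, as a form-encoded body with admin credentials, to port 8091 of a surviving node. Since the failure does not reproduce consistently, the sequence may need to be looped.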

Observation:

Rebalance fails with the following reason:

    Worker <0.10489.1> (for action {move,{1020,
    ['ns_1@172.23.105.205',undefined],
    ['ns_1@172.23.105.206', 'ns_1@172.23.105.205'],
    []}}) exited with reason {unexpected_exit,
    {'EXIT', <0.10650.1>,
    {{error,
    {badrpc,
    {'EXIT',
    {{{{badmatch, {error, dcp_conn_closed}},
    [{couch_set_view_group, process_monitor_partition_update, 4,
    [{file, "/home/couchbase/jenkins/workspace/couchbase-server-unix/couchdb/src/couch_set_view/src/couch_set_view_group.erl"}, {line, 3725}]},
    {couch_set_view_group, handle_call, 3,
    [{file, "/home/couchbase/jenkins/workspace/couchbase-server-unix/couchdb/src/couch_set_view/src/couch_set_view_group.erl"}, {line, 934}]},
    {gen_server, try_handle_call, 4,
    [{file, "gen_server.erl"}, {line, 636}]},
    {gen_server, handle_msg, 6,
    [{file, "gen_server.erl"}, {line, 665}]},
    {proc_lib, init_p_do_apply, 3,
    [{file, "proc_lib.erl"}, {line, 247}]}]},
    {gen_server, call,
    [<12939.514.0>,
    {monitor_partition_update, 1020,
    #Ref<12939.176846531.2340945921.119382>,
    <12939.564.0>}, infinity]}},
    {gen_server, call,
    ['capi_set_view_manager-default',
    {wait_index_updated, 1020}, infinity]}}}}},
    {gen_server, call,
    [{'janitor_agent-default', 'ns_1@172.23.105.206'},
    {if_rebalance, <0.10461.1>,
    {wait_index_updated, 1020}}, infinity]}}}}


    Rebalance exited with reason {mover_crashed,
    {unexpected_exit,
    {'EXIT',<0.10650.1>,
    {{error,
    {badrpc,
    {'EXIT',
    {{{{badmatch,{error,dcp_conn_closed}},
    [{couch_set_view_group, process_monitor_partition_update,4,
    [{file, "/home/couchbase/jenkins/workspace/couchbase-server-unix/couchdb/src/couch_set_view/src/couch_set_view_group.erl"}, {line,3725}]},
    {couch_set_view_group,handle_call,3,
    [{file, "/home/couchbase/jenkins/workspace/couchbase-server-unix/couchdb/src/couch_set_view/src/couch_set_view_group.erl"}, {line,934}]},
    {gen_server,try_handle_call,4, [{file,"gen_server.erl"},{line,636}]},
    {gen_server,handle_msg,6, [{file,"gen_server.erl"},{line,665}]},
    {proc_lib,init_p_do_apply,3, [{file,"proc_lib.erl"},{line,247}]}]},
    {gen_server,call,
    [<12939.514.0>,
    {monitor_partition_update,1020,
    #Ref<12939.176846531.2340945921.119382>,
    <12939.564.0>}, infinity]}},
    {gen_server,call,
    ['capi_set_view_manager-default',
    {wait_index_updated,1020}, infinity]}}}}},
    {gen_server,call,
    [{'janitor_agent-default', 'ns_1@172.23.105.206'},
    {if_rebalance,<0.10461.1>,
    {wait_index_updated,1020}}, infinity]}}}}}.


Rebalance Operation Id = f8d81e60511090035968902b399f19b6


Cluster state:

Testrunner case:

rebalance.rebalance_progress.RebalanceProgressTests.test_progress_add_back_after_failover,nodes_init=4,nodes_out=1,GROUP=P1,blob_generator=false


Note: the failure does not reproduce consistently.
