Details

- Type: Bug
- Resolution: Fixed
- Priority: Critical
- Cheshire-Cat
- 7.0.0-4478
- Untriaged
- 1
- Unknown

Description

- Create a 17 node cluster

- Create 1 bucket with 1 scope and 500 collections (num_buckets=1, num_scopes=1, num_collections=500).

- Create 100000000 items sequentially

- Rebalance in with Loading of docs. Rebalance completed with progress: 100% in 11.5199999809 sec

- Sleep 61 seconds. Reason: Iteration:0 waiting to kill memc on all nodes

- Sleep 72 seconds. Reason: Iteration:1 waiting to kill memc on all nodes

- Rebalance Out with Loading of docs. Rebalance completed with progress: 100% in 97.2890000343 sec

- Sleep 116 seconds. Reason: Iteration:0 waiting to kill memc on all nodes

- Sleep 93 seconds. Reason: Iteration:1 waiting to kill memc on all nodes

- Rebalance In_Out with Loading of docs. Rebalance completed with progress: 100% in 96.7730000019 sec

- Sleep 69 seconds. Reason: Iteration:0 waiting to kill memc on all nodes

- Sleep 99 seconds. Reason: Iteration:1 waiting to kill memc on all nodes

- Swap with Loading of docs. Rebalance completed with progress: 100% in 12.1180000305 sec

- Sleep 81 seconds. Reason: Iteration:0 waiting to kill memc on all nodes

- Sleep 63 seconds. Reason: Iteration:1 waiting to kill memc on all nodes

- Fail over a node and rebalance that node out, with document loading in parallel

- Sleep 109 seconds. Reason: Iteration:0 waiting to kill memc on all nodes

- Sleep 87 seconds. Reason: Iteration:1 waiting to kill memc on all nodes

- Fail over a node, add it back with full recovery, and rebalance.
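The phases above follow one pattern: a topology change under load, then two iterations that each sleep and then kill memcached on all nodes. A minimal sketch, assuming the durations copied from the log are the only data available; `PHASES` and `totals` are hypothetical names for illustration, not part of testrunner. It tallies the logged rebalance time and sleep time before the final failover and full-recovery step.

```python
# Hypothetical summary of the phases logged above; not testrunner code.
# Each entry: (phase, logged rebalance time in seconds or None,
#              sleeps in seconds before memcached-kill iterations 0 and 1).
PHASES = [
    ("rebalance_in", 11.5199999809, [61, 72]),
    ("rebalance_out", 97.2890000343, [116, 93]),
    ("rebalance_in_out", 96.7730000019, [69, 99]),
    ("swap", 12.1180000305, [81, 63]),
    ("failover_rebalance_out", None, [109, 87]),  # duration not logged
]


def totals(phases):
    """Return (sum of logged rebalance times, sum of sleeps), in seconds."""
    rebalance = sum(t for _, t, _ in phases if t is not None)
    sleeps = sum(sum(s) for _, _, s in phases)
    return rebalance, sleeps


if __name__ == "__main__":
    rebalance, sleeps = totals(PHASES)
    print(f"logged rebalance time: {rebalance:.1f} s, sleeps: {sleeps} s")
    # prints: logged rebalance time: 217.7 s, sleeps: 850 s
```

The failover/rebalance-out phase has no logged duration, so it contributes only its sleeps.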

Rebalance Failure: Rebalance exited with reason `{buckets_shutdown_wait_failed, [{'ns_1@172.23.121.115', {'EXIT', {old_buckets_shutdown_wait_failed, ["GleamBookUsers0"]}}}]}`.

Rebalance Operation Id = 27c0ef1569581833a25f2de5d9300d91
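When a reason like this names several nodes, listing which node failed to shut down which bucket speeds up triage. A minimal sketch, assuming the reason is available as the plain string shown above; `stuck_buckets` is a hypothetical helper, not part of ns_server or testrunner, and its regex pairing assumes each node entry carries exactly one `old_buckets_shutdown_wait_failed` list, in the same order as the nodes.

```python
import re

# Reason string copied from the rebalance failure above.
REASON = (
    "{buckets_shutdown_wait_failed, [{'ns_1@172.23.121.115', "
    "{'EXIT', {old_buckets_shutdown_wait_failed, [\"GleamBookUsers0\"]}}}]}"
)

# Node names are quoted atoms such as 'ns_1@<ip>'.
NODE_RE = re.compile(r"'(ns_1@[^']+)'")
# The bucket list that follows each old_buckets_shutdown_wait_failed atom.
BUCKET_LIST_RE = re.compile(r"old_buckets_shutdown_wait_failed,\s*\[([^\]]*)\]")


def stuck_buckets(reason: str) -> dict:
    """Map each node in the reason to the bucket names it could not shut down.

    Naive pairing: the i-th node is matched with the i-th bucket list.
    """
    nodes = NODE_RE.findall(reason)
    lists = BUCKET_LIST_RE.findall(reason)
    return {
        node: re.findall(r'"([^"]+)"', raw)
        for node, raw in zip(nodes, lists)
    }


if __name__ == "__main__":
    print(stuck_buckets(REASON))
    # prints: {'ns_1@172.23.121.115': ['GleamBookUsers0']}
```

A real Erlang term parser would be more robust than regexes if node entries can carry different exit reasons.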

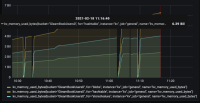

During the test, mem_used stayed within the range between the low and high water marks (LWM and HWM).

QE Test:

guides/gradlew --refresh-dependencies testrunner -P jython=/opt/jython/bin/jython -P 'args=-i /tmp/test_job_magma.ini -p bucket_storage=couchstore,bucket_eviction_policy=fullEviction,rerun=False -t volumetests.Magma.volume.SystemTestMagma,nodes_init=17,replicas=1,skip_cleanup=True,num_items=100000000,num_buckets=1,bucket_names=GleamBook,doc_size=64,bucket_type=membase,compression_mode=off,iterations=20,batch_size=1000,sdk_timeout=60,log_level=debug,infra_log_level=debug,rerun=False,skip_cleanup=True,key_size=18,randomize_doc_size=False,randomize_value=True,assert_crashes_on_load=True,maxttl=60,num_buckets=1,num_scopes=1,num_collections=500,doc_ops=create:update,durability=None,crashes=1,sdk_client_pool=True -m rest'
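The `-t` argument packs the test name and its parameters into one comma-separated string, and two keys (`skip_cleanup` and `num_buckets`) appear twice in it. A minimal sketch for listing the parameters and spotting repeats, assuming plain `key=value` pairs with no escaped commas; `parse_test_spec` is hypothetical, not testrunner's own parser, and the "last value wins" rule is an assumption rather than documented testrunner behavior.

```python
from collections import Counter

# The -t value from the QE command above, copied verbatim.
TEST_SPEC = (
    "volumetests.Magma.volume.SystemTestMagma,nodes_init=17,replicas=1,"
    "skip_cleanup=True,num_items=100000000,num_buckets=1,bucket_names=GleamBook,"
    "doc_size=64,bucket_type=membase,compression_mode=off,iterations=20,"
    "batch_size=1000,sdk_timeout=60,log_level=debug,infra_log_level=debug,"
    "rerun=False,skip_cleanup=True,key_size=18,randomize_doc_size=False,"
    "randomize_value=True,assert_crashes_on_load=True,maxttl=60,num_buckets=1,"
    "num_scopes=1,num_collections=500,doc_ops=create:update,durability=None,"
    "crashes=1,sdk_client_pool=True"
)


def parse_test_spec(spec):
    """Split 'test.name,k1=v1,...' into (test_name, params, duplicate_keys).

    Assumption: a repeated key keeps its last value in `params`.
    """
    test_name, *pairs = spec.split(",")
    params = {}
    counts = Counter()
    for pair in pairs:
        key, _, value = pair.partition("=")
        counts[key] += 1
        params[key] = value
    duplicates = sorted(k for k, n in counts.items() if n > 1)
    return test_name, params, duplicates


if __name__ == "__main__":
    name, params, dups = parse_test_spec(TEST_SPEC)
    print(name, dups)
    # prints: volumetests.Magma.volume.SystemTestMagma ['num_buckets', 'skip_cleanup']
```

In this command the repeated keys carry identical values, so the duplicates do not change the test configuration.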
