Details

- Bug

- Resolution: Duplicate

- Test Blocker

- Cheshire-Cat

- Untriaged

- 1

- Unknown

Description

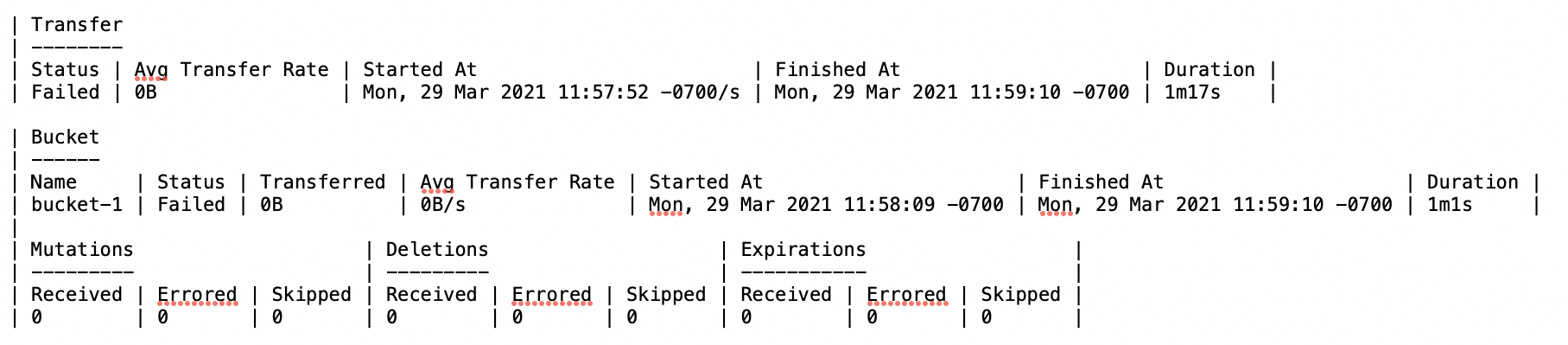

In our performance runs, the backup failed with the error "operation has timed out".

Build: 7.0.0-4797

root@ubuntu:/tmp/magma_backup# ./opt/couchbase/bin/cbbackupmgr backup --archive /tmp/backup --repo default --host http://172.23.105.72 --username Administrator --password password --threads 16 --storage sqlite

Backing up to '2021-03-29T11_57_52.872118914-07_00'

Transferring key value data for bucket 'bucket-1' at 0B/s (about 1h59m42s remaining) 0 items / 0B

[== ] 1.06%

Error backing up cluster: operation has timed out

Logs

Server logs:

https://s3.amazonaws.com/bugdb/jira/qe/collectinfo-2021-03-29T214013-ns_1%40172.23.105.72.zip

https://s3.amazonaws.com/bugdb/jira/qe/collectinfo-2021-03-29T214013-ns_1%40172.23.105.73.zip

https://s3.amazonaws.com/bugdb/jira/qe/collectinfo-2021-03-29T214013-ns_1%40172.23.105.78.zip

Issue Links

- duplicates: GOCBC-1073 DCP can get stuck during closing (Resolved)

- relates to: MB-45320 [Magma] Backup performance tests failed on 7.0.0-4797 (Closed)