Details

- Bug
- Resolution: Fixed
- Major
- 7.1.0
- Untriaged
- 1
- Yes

Description

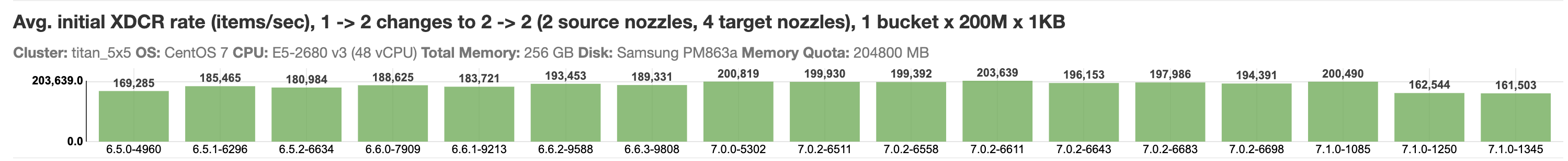

| Build | Avg. initial XDCR rate | Job |
|---|---|---|
| 7.1.0-1085 | 200,490 | http://perf.jenkins.couchbase.com/job/titan/11564/ |
| 7.1.0-1250 | 162,544 | http://perf.jenkins.couchbase.com/job/titan/12048/ |
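
To put a number on the regression between the two builds above, the relative drop can be computed directly from the table. This is only a rough sketch: the helper name `relative_drop` is made up for illustration, and the rates are used exactly as reported, since the ticket does not state their unit.

```python
def relative_drop(baseline: float, current: float) -> float:
    """Return the fractional decrease from baseline to current."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (baseline - current) / baseline


# Avg. initial XDCR rates taken from the table above.
rate_1085 = 200_490  # build 7.1.0-1085
rate_1250 = 162_544  # build 7.1.0-1250

drop = relative_drop(rate_1085, rate_1250)
print(f"Relative drop: {drop:.1%}")  # prints "Relative drop: 18.9%"
```

On these two runs, build 7.1.0-1250 is roughly 19% slower than 7.1.0-1085.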