Details

- Type: Improvement
- Resolution: Unresolved
- Priority: Major
- Version: 7.1.0

Description

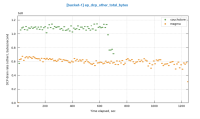

I re-ran two existing XDCR tests with Magma. Compared to Couchstore, Magma's initial replication rate is roughly 44-45% lower in both tests: Magma reaches only about 55-56% of the Couchstore rate. I am opening this ticket to track XDCR + Magma performance improvements. All runs used build 7.1.0-1401.

Avg. initial XDCR rate (items/sec), 1 -> 1 (2 source nozzles, 4 target nozzles), 1 bucket x 100M x 1KB

| Storage | XDCR rate (items/sec) | Job |
|---|---|---|
| Couchstore | 141381 | http://perf.jenkins.couchbase.com/job/titan/12218/ |
| Magma | 79794 | http://perf.jenkins.couchbase.com/job/titan/12214/ |

Avg. initial XDCR rate (items/sec), 5 -> 5 (2 source nozzles, 4 target nozzles), 1 bucket x 250M x 1KB

| Storage | XDCR rate (items/sec) | Job |
|---|---|---|
| Couchstore | 619398 | http://perf.jenkins.couchbase.com/job/titan/12217/ |
| Magma | 343931 | http://perf.jenkins.couchbase.com/job/titan/12215/ |
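The Magma-vs-Couchstore gap described above can be computed directly from the two tables. This is a minimal C++ sketch; the `XdcrRun` struct and the helper names are illustrative only and are not part of any Couchbase tool. It computes Magma's rate as a fraction of Couchstore's and the corresponding percentage drop.

```cpp
#include <cassert>

// Average initial XDCR rates (items/sec) for one test configuration.
// Illustrative type, not part of any Couchbase tool.
struct XdcrRun {
    double couchstore_rate;
    double magma_rate;
};

// Magma's rate as a fraction of Couchstore's (e.g. 0.56 means 56%).
double magma_ratio(const XdcrRun& r) {
    assert(r.couchstore_rate > 0.0);  // guard against division by zero
    return r.magma_rate / r.couchstore_rate;
}

// How much lower Magma's rate is, as a percentage of the Couchstore rate.
double magma_slowdown_pct(const XdcrRun& r) {
    return (1.0 - magma_ratio(r)) * 100.0;
}

// Values taken from the two tables above.
const XdcrRun kOneToOne{141381.0, 79794.0};     // 1 -> 1, 1 bucket x 100M x 1KB
const XdcrRun kFiveToFive{619398.0, 343931.0};  // 5 -> 5, 1 bucket x 250M x 1KB
```

With the table values this gives about 43.6% lower for the 1 -> 1 run (Magma at roughly 56.4% of Couchstore) and about 44.5% lower for the 5 -> 5 run (roughly 55.5%).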

Gerrit Reviews

For Gerrit Dashboard: MB-48834

| # | Subject | Branch | Project | Status | CR | V |
|---|---|---|---|---|---|---|
| 168943,2 | MB-48834 util/file: Add support for sync_file_range | master | magma | NEW | 0 | +1 |
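The linked review adds `sync_file_range` support to Magma's `util/file`. The patch itself is not shown in this ticket. For readers unfamiliar with the call, here is a hedged, Linux-only C++ sketch of one common usage pattern. After each chunk of writes, `sync_file_range(..., SYNC_FILE_RANGE_WRITE)` starts asynchronous writeback of just that byte range, so dirty pages are flushed incrementally rather than all at once at the final `fdatasync`. The helper `write_with_writeback` is hypothetical and is not Magma's API. The ticket also does not say whether this is how Magma uses the call.

```cpp
// Linux-only: sync_file_range is declared in <fcntl.h> when _GNU_SOURCE is defined
// (g++ on Linux defines it by default; the guard avoids a redefinition warning).
#ifndef _GNU_SOURCE
#define _GNU_SOURCE
#endif
#include <fcntl.h>
#include <sys/types.h>
#include <unistd.h>
#include <cassert>
#include <cerrno>
#include <cstddef>

// Hypothetical helper (not Magma's util/file API): write `len` bytes from `buf`
// to `fd` in `chunk`-sized pieces. After each piece, ask the kernel to start
// writeback of exactly the range just written. Returns 0 on success, -1 on error.
int write_with_writeback(int fd, const char* buf, size_t len, size_t chunk) {
    assert(buf != nullptr || len == 0);
    if (chunk == 0) {
        errno = EINVAL;
        return -1;
    }
    off_t start = lseek(fd, 0, SEEK_CUR);  // file offset where our writes begin
    if (start == (off_t)-1) return -1;

    size_t done = 0;
    while (done < len) {
        size_t n = (len - done < chunk) ? len - done : chunk;
        ssize_t w = write(fd, buf + done, n);
        if (w < 0) {
            if (errno == EINTR) continue;  // interrupted before writing; retry
            return -1;
        }
        // Initiate writeback of the bytes just written. This does not wait for
        // the I/O to complete and never flushes metadata, so it is not durable.
        if (sync_file_range(fd, start + (off_t)done, (off_t)w,
                            SYNC_FILE_RANGE_WRITE) != 0) {
            return -1;
        }
        done += (size_t)w;
    }
    // Durability still requires fdatasync (or fsync).
    return fdatasync(fd);
}
```

`SYNC_FILE_RANGE_WRITE` on its own only initiates writeback and writes no file metadata. That is why the sketch still ends with `fdatasync` when the data must actually be persisted.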