Details

Bug

Resolution: Fixed

Critical

master, 7.1.4, 7.1.1, 7.1.2, 7.1.3

Untriaged

0

Unknown

KV 2023-2

Description

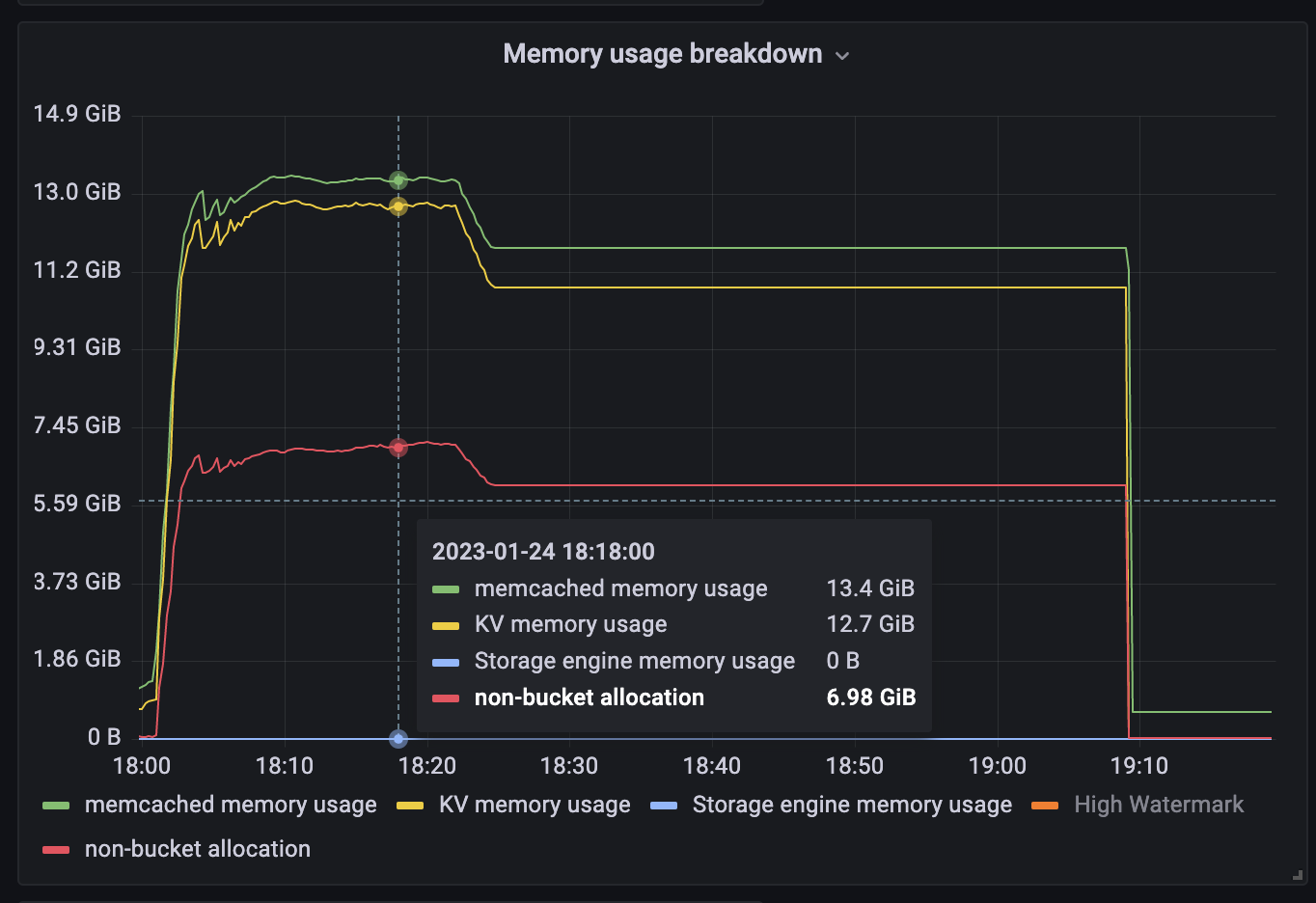

We can cause the non-bucket allocation size (reported as ep_arena_global:allocated and kv_daemon_memory_allocated_bytes) to grow without bound by running a mutation-heavy workload with item sizes <4k.

I've managed to reproduce this on 7.1.1, 7.1.2, 7.1.3, and current master, with both Magma and Couchstore buckets.

Repro

To reproduce:

1. Create a bucket (bucket configuration appears irrelevant)

2. Run cbc-pillowfight -I 10000000 -m 4000 -M 4000 -U couchbase://localhost/test -u Administrator -P $PASSWORD -r 100

3. Observe the kv_daemon_memory_allocated_bytes climb to ~7 GiB.

The non-bucket allocation is reported as kv_daemon_memory_allocated_bytes. Notice that KV memory usage (kv_mem_used_bytes) plus the non-bucket allocation exceeds the process memory usage. With no swap configured, that should not be possible, so the stat must be wrong.
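The consistency check described above can be written down as a small sketch. The function name and the sample figures are hypothetical and only illustrate the reasoning; they are not taken from the affected cluster.

```python
GIB = 1024 ** 3


def accounting_is_plausible(kv_mem_used_bytes, daemon_allocated_bytes,
                            process_rss_bytes, swap_bytes=0):
    """Rough check: tracked allocations should fit in the memory the process holds.

    All arguments are byte counts. swap_bytes is 0 when no swap is configured.
    """
    tracked = kv_mem_used_bytes + daemon_allocated_bytes
    return tracked <= process_rss_bytes + swap_bytes


# Hypothetical figures: 2 GiB of bucket memory, a drifted 7 GiB non-bucket
# allocation, and a process that only holds 4 GiB.
print(accounting_is_plausible(2 * GIB, 7 * GIB, 4 * GIB))
```

When the check fails with no swap configured, at least one of the tracked stats is over-reporting.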

Cause

I've managed to track this bug down to https://review.couchbase.org/c/platform/+/174991 (introduced in 7.1.1). Reverting that change fixes the inaccurate stat.

Before the change, we manually initialised and toggled jemalloc's tcache. The change removes that manual control and leaves tcache management to jemalloc. The change description itself warns of "possible some 'drift' in the ArenaMalloc stats".

More work is necessary to determine exactly why this is manifesting in this way.

Impact

One of the ways in which we manage memory fragmentation in KV-Engine is by actively defragmenting item allocations. We do this using a background task called the DefragmenterTask which runs for each bucket. The rate at which this task runs is determined by the defragmenter_mode configuration parameter, which can be one of auto_pid (the default), auto_linear and static (the old default).

The only place where this stat is used is the DefragmenterTask, and only in the auto_pid (default) and auto_linear modes. These two modes use kv_daemon_memory_allocated_bytes and kv_daemon_memory_resident_bytes (both stats are affected) to determine fragmentation and to increase or decrease the defragmentation rate.

In effect, when this stat is incorrect, the defragmenter could run at an unexpected rate, allowing for greater than normal memory fragmentation.
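To illustrate why a drifting input matters, here is a minimal sketch that assumes fragmentation is computed as the share of resident memory that is not allocated. This is a common definition and is an assumption here, not necessarily the exact formula the DefragmenterTask uses. The function name and the figures are hypothetical.

```python
GIB = 1024 ** 3


def fragmentation_ratio(allocated_bytes, resident_bytes):
    """Fraction of resident memory not backing live allocations (assumed formula)."""
    if resident_bytes <= 0:
        return 0.0
    # Clamp at zero: a drifted 'allocated' value can exceed 'resident'.
    return max(0.0, (resident_bytes - allocated_bytes) / resident_bytes)


# Hypothetical figures: 8 GiB allocated out of 10 GiB resident.
accurate = fragmentation_ratio(8 * GIB, 10 * GIB)

# The same process with the allocated stat over-reported by 1 GiB.
drifted = fragmentation_ratio(9 * GIB, 10 * GIB)

print(accurate, drifted)
```

Under this assumption, an over-reported allocated value makes memory look less fragmented than it is, so a controller driven by the ratio would defragment less aggressively than intended.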

We're not aware of any other possible adverse effects and we're not using this stat for anything else internally.

All other memory stats are accounted for independently and will have their correct values.

| Issue | Resolution |
| --- | --- |
| A shared allocation cache (tcache) between buckets resulted in a stats drift. This caused higher-than-normal memory fragmentation. | Dedicated tcaches are now used for buckets. jemalloc has been changed to support increased numbers of tcaches. |