Details

- Bug

- Resolution: Fixed

- Critical

- 2.0.1

- Security Level: Public

- None

-

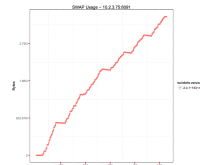

Seen on the last few builds.

Observed on:

2.0.1-153 Linux

4 Core 30GB SSD machines

Description

- Set up a 7-node cluster.

- RAM (for each physical machine): ~32GB

- Create 2 buckets: default (bucket quota: 12000MB per node) and saslbucket (bucket quota: 7000MB per node).

- Run a creates-only load until the item count hits a mark.

- Create 2 views on each of the buckets.

- Run a load that pushes the system into DGM (disk greater than memory), with ~80% resident ratio for default and ~60% resident ratio for saslbucket.

- Run queries continuously against the views as the load continues.

(e.g. curl -X GET 'http://10.1.3.235:8092/default/_design/d1/_view/v1?startkey=0&stale=ok&limit=1000')

- Noticed increased swap usage.

- Start a new mixed load on both buckets (creates, updates, deletes, expires).

- Set up XDCR to another 5-node cluster.

- Continue querying; noticed a considerable increase in swap usage.

This behavior is also seen on a cluster with views only (no XDCR involved).
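
To make the swap growth easier to reproduce and compare across builds, the query step can be scripted so that swap usage is sampled after every request. The following is only a rough sketch, not the procedure actually used for this report: the helper names `swap_used_kb` and `query_and_sample` are hypothetical, the swap figure is read from `/proc/meminfo` (Linux only), and the script is assumed to run on the cluster node being observed, since it measures local swap.

```shell
#!/bin/sh
# Hypothetical helper: print swap in use (kB) from a meminfo-style file.
# Defaults to /proc/meminfo, which is Linux-specific.
swap_used_kb() {
    awk '/^SwapTotal:/ {total = $2}
         /^SwapFree:/  {free = $2}
         END {print total - free}' "${1:-/proc/meminfo}"
}

# Hypothetical helper: issue the view query COUNT times and print a
# timestamp plus the local swap usage after each request.
#   $1 = view URL, $2 = number of queries, $3 = seconds between queries
query_and_sample() {
    url=$1
    count=$2
    interval=${3:-1}
    i=0
    while [ "$i" -lt "$count" ]; do
        # The response body is discarded; only the memory side effect matters.
        curl -s -o /dev/null --max-time 30 -X GET "$url" || echo "query failed" >&2
        echo "$(date +%s) swap_used_kb=$(swap_used_kb)"
        sleep "$interval"
        i=$((i + 1))
    done
}
```

With the functions loaded, a run of 100 queries spaced 5 seconds apart against the view from the description would look like `query_and_sample 'http://10.1.3.235:8092/default/_design/d1/_view/v1?startkey=0&stale=ok&limit=1000' 100 5`. Logging the output before and after the mixed load and the XDCR setup gives a simple per-step trend of swap usage.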