Details

-

New Feature

-

Resolution: Fixed

-

Major

-

None

-

None

-

0

Description

There is certain amount of tasks that the administrator has to perform to prepare cluster for using this feature and things to keep in mind:

- Moving servers between Server Groups updates the clustermap immediately, but to move the data, an administrator MUST perform rebalance. Until the rebalance is complete, the SDK will see and be able to "use" the new server groups, but the vBucketMap might still arrange data in the previous locations. For any keys in this state (until the rebalance is complete) the SDK will think they're available in that Server Group (because we have the new clustermap), but they are not actually in that Server Group yet. So anyone using this feature for "Zone Aware Replica Reads" may not be performing the reads in the intended zone until the rebalance is complete.

- The feature is pretty sensitive on how many replicas, nodes, and groups defined. The following table shows some possible combinations

Number Of Replicas Number of Nodes Number of Server Groups Result > 0 1 any Unbalanced, not enough nodes to keep active and replicas separate 1 2n, n>=1 2 Good, each group gets either active or replica copy of the document, and same number of the nodes 1 2n+1, n>=1 2 Enough nodes to replicate documents, but still Unbalanced because of odd number of the nodes and even number of groups:

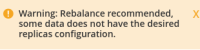

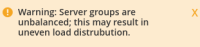

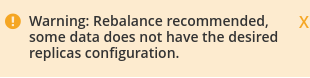

1 any 3 Unbalanced, while it is enough nodes for replica distribution, it is not enough nodes to cover all vBuckets in every group, so non-Local requests would be inevitable, as each group would cover only 681 vBuckets at best in case of 6 nodes, two copies of the document cannot be presented in each of the group. Unfortunately no warning from the server in this case. 2 2n, n>=1 2 Good, but because here the cluster will put more than one copy into group, it is possible that when SDK does local reads, it will receive more than one copy. 2 3n, n>=1 3 Good, each group will have either replica, or active copy of the document. 3 any any The server disables sync writes for 3 replicas as documented in DOC-12182, so this mode is not very practical, although the same logic applies, and it would be possible to configure 4 groups where local reads will always return something without doing expensive calls to other groups any any >4 Unbalanced in terms of the feature, because maximum possible copies of the each document (active+replica) is 4, so some reads will have to reach to non-local server group So the rule here is kind of common sense, that the cluster should have enough nodes and group to make sure that copies of the same document are not stored on the same node, and each group has nodes that cover all 1024 vbuckets (in other words, the number of the groups does not exceeds number of the copies (active+num_replicas)). The Admin UI should emit small yellow warning if the configuration is considered unbalanced,

|

| - Three replicas for the bucket disables durability of sync writes, rendering transactions not very useful. See DOC-12182

The validation of the topology is not in the scope of the SDK RFC, or implementation, this is why it is important that server docs will help people to prepare and validate their setup. Right now I have tool, which I plan to cleanup and release as community project. This tool can visualize changes in cluster topology in real time, as long as tell when the cluster is not balanced well for the local reads. It creates diagrams like this (I only tested it on Mac and Linux). Large rectangles here represet active copies, small – replicas, and the different servers are color-coded. On the right it shows how many vBuckets each group covers.