Description

Couchbase Cluster Description

- Set up the cluster as per the required specifications

- Each node is an m5.4xlarge instance (16 vCPUs, 64 GB RAM).

- 6 Data Service nodes and 4 Index and Query Service nodes.

- 10 buckets (1 replica each), full eviction, and an auto-failover timeout of 5 s.

- ~210 GB of data per bucket → ~2 TB of data loaded onto the cluster.

- 50 primary indexes with 1 replica each (100 indexes in total).

Couchbase Autonomous Operator (CAO): 2.6.4

Steps performed:

- Upgraded Couchbase Server from 7.2.4 to 7.2.5 (successful).

- Upgraded EKS from 1.25 to 1.26.

The Couchbase Server upgrade from 7.2.4 to 7.2.5 succeeded. However, during the EKS upgrade from 1.25 to 1.26 (with Couchbase Server on 7.2.5), node 0004 went down while the rebalance adding node 0003 back into the cluster was in progress.

Topology of CB Cluster Pods

| CB Server pod | Worker node |
|---|---|
| cb-example-0000 | ip-10-0-1-100.us-east-2.compute.internal |
| cb-example-0001 | ip-10-0-1-121.us-east-2.compute.internal |
| cb-example-0002 | ip-10-0-2-164.us-east-2.compute.internal |
| cb-example-0003 | ip-10-0-3-47.us-east-2.compute.internal |
| cb-example-0004 | ip-10-0-2-57.us-east-2.compute.internal |
| cb-example-0005 | ip-10-0-3-64.us-east-2.compute.internal |
| cb-example-0006 | ip-10-0-1-188.us-east-2.compute.internal |
| cb-example-0007 | ip-10-0-3-151.us-east-2.compute.internal |
| cb-example-0008 | ip-10-0-1-44.us-east-2.compute.internal |
| cb-example-0009 | ip-10-0-2-57.us-east-2.compute.internal |

Note: All pods run on different worker nodes.

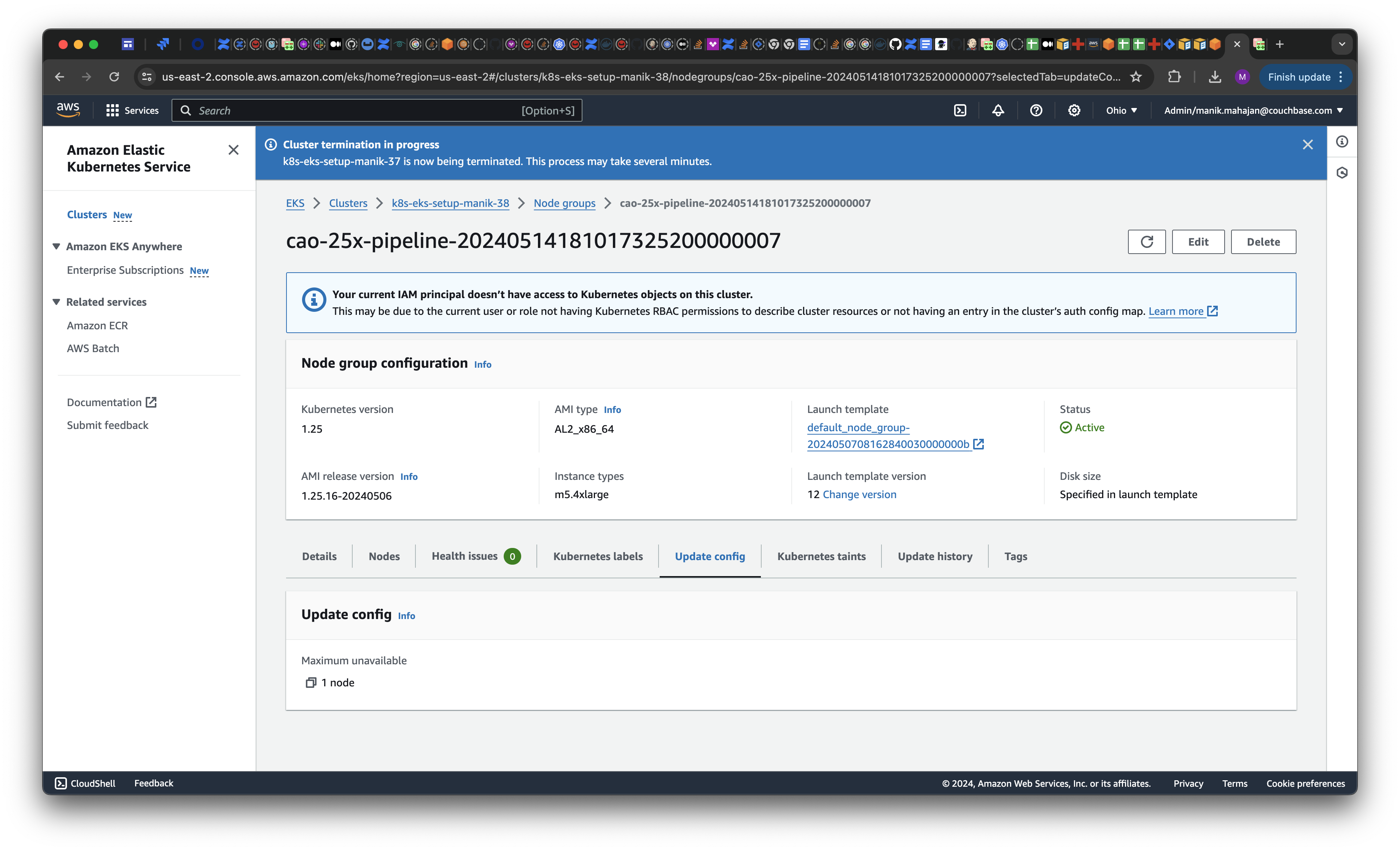

Note: The updateConfig of the Amazon EKS managed node group is left at its default (maxUnavailable = 1), so only one worker node at a time is upgraded to the new Kubernetes version.
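To confirm the node group setting described in the note, the update configuration can be read back from the EKS API. A minimal sketch, assuming the response shape of boto3's `describe_nodegroup` (`nodegroup.updateConfig.maxUnavailable` or `maxUnavailablePercentage`); the cluster and node group names in the comment are placeholders. The setting only bounds how many worker nodes are replaced at once; it says nothing about whether a Couchbase rebalance has finished.

```python
from typing import Any, Mapping


def describe_update_config(response: Mapping[str, Any]) -> str:
    """Summarise the rolling-update limit of an EKS managed node group.

    `response` is assumed to be the dict returned by boto3's
    eks.describe_nodegroup(clusterName=..., nodegroupName=...).
    """
    update_config = response.get("nodegroup", {}).get("updateConfig", {})
    if "maxUnavailable" in update_config:
        return f"maxUnavailable={update_config['maxUnavailable']} node(s)"
    if "maxUnavailablePercentage" in update_config:
        return f"maxUnavailablePercentage={update_config['maxUnavailablePercentage']}%"
    return "updateConfig not present in response"


if __name__ == "__main__":
    # Real call (needs AWS credentials; names are placeholders):
    #   import boto3
    #   resp = boto3.client("eks").describe_nodegroup(
    #       clusterName="my-eks-cluster", nodegroupName="my-nodegroup")
    resp = {"nodegroup": {"updateConfig": {"maxUnavailable": 1}}}  # sample shape only
    print(describe_update_config(resp))
```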

Log analysis:

- The EKS upgrade activity appears to have started at 2024-05-13T13:22, beginning with node 0009 being brought down.
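The events quoted in this analysis are ns_server system events. A minimal sketch for condensing them into a timeline, assuming they have been saved one JSON object per line (the file name `events.jsonl` is hypothetical); it only reads fields visible in the events below (`timestamp`, `description`, `failover_nodes`, `delta_nodes`, `down_node`, `nodes`).

```python
import json
from typing import Iterable, List, Tuple


def short_name(otp_node: str) -> str:
    """'ns_1@cb-example-0003.cb-example.default.svc' -> 'cb-example-0003'."""
    return otp_node.split("@", 1)[-1].split(".", 1)[0]


def timeline(lines: Iterable[str]) -> List[Tuple[str, str, str]]:
    """Return (timestamp, description, affected nodes) for each JSON event line."""
    rows = []
    for line in lines:
        line = line.strip()
        if not line:
            continue
        event = json.loads(line)
        extra = event.get("extra_attributes", {})
        info = extra.get("nodes_info", {})
        affected = (
            info.get("failover_nodes", [])  # "Auto failover completed"
            + info.get("delta_nodes", [])   # rebalance with delta recovery
            + extra.get("nodes", [])        # "Auto failover warning"
        )
        if "down_node" in extra:            # "Node Down"
            affected.append(extra["down_node"])
        rows.append((event["timestamp"], event["description"],
                     ",".join(short_name(n) for n in affected)))
    # Timestamps share one ISO-8601 UTC format, so string order is time order.
    return sorted(rows)


def print_timeline(path: str) -> None:
    """Print one line per event from a JSON-lines file."""
    with open(path, encoding="utf-8") as fh:
        for ts, desc, nodes in timeline(fh):
            print(f"{ts}  {desc:<25}  {nodes}")
```

For example, `print_timeline("events.jsonl")` would list the failover, rebalance, and node-down events in order, making overlaps such as the one below easier to spot.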

Auto-failover completed for node 0009:

{"timestamp":"2024-05-13T13:22:30.984Z","event_id":15,"component":"ns_server","description":"Auto failover completed","severity":"info","node":"cb-example-0000.cb-example.default.svc","otp_node":"ns_1@cb-example-0000.cb-example.default.svc","uuid":"0f1ee0d1-dc10-4131-b36c-1dab790c8844","extra_attributes":{"operation_id":"1cbfce813fcf0d808141db836e199551","nodes_info":

{"active_nodes":["ns_1@cb-example-0000.cb-example.default.svc","ns_1@cb-example-0001.cb-example.default.svc","ns_1@cb-example-0002.cb-example.default.svc","ns_1@cb-example-0003.cb-example.default.svc","ns_1@cb-example-0004.cb-example.default.svc","ns_1@cb-example-0005.cb-example.default.svc","ns_1@cb-example-0006.cb-example.default.svc","ns_1@cb-example-0007.cb-example.default.svc","ns_1@cb-example-0008.cb-example.default.svc","ns_1@cb-example-0009.cb-example.default.svc"],"failover_nodes":["ns_1@cb-example-0009.cb-example.default.svc"],"master_node":"ns_1@cb-example-0000.cb-example.default.svc"},"time_taken":2376,"completion_message":"Failover completed successfully."}}

The node was not rebalanced out of the cluster. Once upgraded, it was added back to the cluster and a rebalance was initiated:

{"timestamp":"2024-05-13T13:24:15.566Z","event_id":2,"component":"ns_server","description":"Rebalance initiated","severity":"info","node":"cb-example-0000.cb-example.default.svc","otp_node":"ns_1@cb-example-0000.cb-example.default.svc","uuid":"dc2f90d8-8f4d-4fc4-a1c2-c98f540f7d55","extra_attributes":{"operation_id":"0a7034f9884ad197d6017aae4b0abdae","nodes_info":

{"active_nodes":["ns_1@cb-example-0000.cb-example.default.svc","ns_1@cb-example-0001.cb-example.default.svc","ns_1@cb-example-0002.cb-example.default.svc","ns_1@cb-example-0003.cb-example.default.svc","ns_1@cb-example-0004.cb-example.default.svc","ns_1@cb-example-0005.cb-example.default.svc","ns_1@cb-example-0006.cb-example.default.svc","ns_1@cb-example-0007.cb-example.default.svc","ns_1@cb-example-0008.cb-example.default.svc","ns_1@cb-example-0009.cb-example.default.svc"],"keep_nodes":["ns_1@cb-example-0000.cb-example.default.svc","ns_1@cb-example-0001.cb-example.default.svc","ns_1@cb-example-0002.cb-example.default.svc","ns_1@cb-example-0003.cb-example.default.svc","ns_1@cb-example-0004.cb-example.default.svc","ns_1@cb-example-0005.cb-example.default.svc","ns_1@cb-example-0006.cb-example.default.svc","ns_1@cb-example-0007.cb-example.default.svc","ns_1@cb-example-0008.cb-example.default.svc","ns_1@cb-example-0009.cb-example.default.svc"],"eject_nodes":[],"delta_nodes":[],"failed_nodes":[]}}}

The rebalance completed at 13:24:52 (time_taken 37229 ms, about 37 s):

{"timestamp":"2024-05-13T13:24:52.795Z","event_id":3,"component":"ns_server","description":"Rebalance completed","severity":"info","node":"cb-example-0000.cb-example.default.svc","otp_node":"ns_1@cb-example-0000.cb-example.default.svc","uuid":"559b0a99-2c9b-4137-a81f-f0dfcdc383b9","extra_attributes":{"operation_id":"0a7034f9884ad197d6017aae4b0abdae","nodes_info":

{"active_nodes":["ns_1@cb-example-0000.cb-example.default.svc","ns_1@cb-example-0001.cb-example.default.svc","ns_1@cb-example-0002.cb-example.default.svc","ns_1@cb-example-0003.cb-example.default.svc","ns_1@cb-example-0004.cb-example.default.svc","ns_1@cb-example-0005.cb-example.default.svc","ns_1@cb-example-0006.cb-example.default.svc","ns_1@cb-example-0007.cb-example.default.svc","ns_1@cb-example-0008.cb-example.default.svc","ns_1@cb-example-0009.cb-example.default.svc"],"keep_nodes":["ns_1@cb-example-0000.cb-example.default.svc","ns_1@cb-example-0001.cb-example.default.svc","ns_1@cb-example-0002.cb-example.default.svc","ns_1@cb-example-0003.cb-example.default.svc","ns_1@cb-example-0004.cb-example.default.svc","ns_1@cb-example-0005.cb-example.default.svc","ns_1@cb-example-0006.cb-example.default.svc","ns_1@cb-example-0007.cb-example.default.svc","ns_1@cb-example-0008.cb-example.default.svc","ns_1@cb-example-0009.cb-example.default.svc"],"eject_nodes":[],"delta_nodes":[],"failed_nodes":[]},"time_taken":37229,"completion_message":"Rebalance completed successfully."}}

Later, node 0007 was upgraded in the same fashion.

Next came node 0003, which was auto-failed over at 2024-05-13T13:28:

{"timestamp":"2024-05-13T13:28:44.297Z","event_id":15,"component":"ns_server","description":"Auto failover completed","severity":"info","node":"cb-example-0000.cb-example.default.svc","otp_node":"ns_1@cb-example-0000.cb-example.default.svc","uuid":"cc4ad459-8fe0-4aa8-be4f-4b33ebb361b1","extra_attributes":{"operation_id":"aa9d780ab8070a717de6f8178b4efd15","nodes_info":

{"active_nodes":["ns_1@cb-example-0000.cb-example.default.svc","ns_1@cb-example-0001.cb-example.default.svc","ns_1@cb-example-0002.cb-example.default.svc","ns_1@cb-example-0003.cb-example.default.svc","ns_1@cb-example-0004.cb-example.default.svc","ns_1@cb-example-0005.cb-example.default.svc","ns_1@cb-example-0006.cb-example.default.svc","ns_1@cb-example-0007.cb-example.default.svc","ns_1@cb-example-0008.cb-example.default.svc","ns_1@cb-example-0009.cb-example.default.svc"],"failover_nodes":["ns_1@cb-example-0003.cb-example.default.svc"],"master_node":"ns_1@cb-example-0000.cb-example.default.svc"},"time_taken":1015,"completion_message":"Failover completed successfully."}}

A rebalance was initiated at 2024-05-13T13:35 to delta-recover node 0003:

{"timestamp":"2024-05-13T13:35:40.425Z","event_id":2,"component":"ns_server","description":"Rebalance initiated","severity":"info","node":"cb-example-0000.cb-example.default.svc","otp_node":"ns_1@cb-example-0000.cb-example.default.svc","uuid":"30a70a64-8a13-417d-a832-1e553b457c85","extra_attributes":{"operation_id":"b7d215118f0ab480859a1b351f16c00d","nodes_info":

{"active_nodes":["ns_1@cb-example-0000.cb-example.default.svc","ns_1@cb-example-0001.cb-example.default.svc","ns_1@cb-example-0002.cb-example.default.svc","ns_1@cb-example-0003.cb-example.default.svc","ns_1@cb-example-0004.cb-example.default.svc","ns_1@cb-example-0005.cb-example.default.svc","ns_1@cb-example-0006.cb-example.default.svc","ns_1@cb-example-0007.cb-example.default.svc","ns_1@cb-example-0008.cb-example.default.svc","ns_1@cb-example-0009.cb-example.default.svc"],"keep_nodes":["ns_1@cb-example-0000.cb-example.default.svc","ns_1@cb-example-0001.cb-example.default.svc","ns_1@cb-example-0002.cb-example.default.svc","ns_1@cb-example-0003.cb-example.default.svc","ns_1@cb-example-0004.cb-example.default.svc","ns_1@cb-example-0005.cb-example.default.svc","ns_1@cb-example-0006.cb-example.default.svc","ns_1@cb-example-0007.cb-example.default.svc","ns_1@cb-example-0008.cb-example.default.svc","ns_1@cb-example-0009.cb-example.default.svc"],"eject_nodes":[],"delta_nodes":["ns_1@cb-example-0003.cb-example.default.svc"],"failed_nodes":[]}}}

While this rebalance was still in progress, node 0004 went down for the upgrade. It could not be auto-failed over because node 0003 had already been failed over and was not yet back in the cluster, so the maximum auto-failover count (1) had already been reached:

{"timestamp":"2024-05-13T13:37:30.281Z","event_id":20,"component":"ns_server","description":"Node Down","severity":"warning","node":"cb-example-0009.cb-example.default.svc","otp_node":"ns_1@cb-example-0009.cb-example.default.svc","uuid":"10315522-d098-421b-b76b-57a03947f7ef","extra_attributes":{"down_node":"ns_1@cb-example-0004.cb-example.default.svc","reason":"[{nodedown_reason,connection_closed}]"}}

{"timestamp":"2024-05-13T13:37:35.863Z","event_id":17,"component":"ns_server","description":"Auto failover warning","severity":"warn","node":"cb-example-0000.cb-example.default.svc","otp_node":"ns_1@cb-example-0000.cb-example.default.svc","uuid":"432bafe8-7fab-4514-8834-d454a48a6db9","extra_attributes":{"reason":"Maximum number of auto-failover nodes (1) has been reached.","nodes":["ns_1@cb-example-0004.cb-example.default.svc"]}}
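The reasoning above can be made concrete with a toy model of the auto-failover quota. This is a simplification, not ns_server's implementation: it assumes the counter increments on each auto-failover and is reset only when a rebalance completes successfully (a manual reset is not modelled), and it uses a maximum of 1, as the warning event reports. Node 0007 is assumed to have followed the same failover/rebalance pattern as 0009.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class AutoFailoverQuota:
    """Toy model of the auto-failover quota (a simplification, not ns_server's code)."""
    max_count: int = 1  # the warning event reports a maximum of 1
    count: int = 0      # auto-failovers since the last reset
    events: List[str] = field(default_factory=list)

    def node_down(self, node: str) -> bool:
        """Record a node going down; return True if it would be auto-failed over."""
        if self.count < self.max_count:
            self.count += 1
            self.events.append(f"auto failover {node}")
            return True
        self.events.append(
            f"warning: max auto-failover count ({self.max_count}) reached, {node} stays down"
        )
        return False

    def rebalance_completed(self) -> None:
        """Assumption: a successful rebalance resets the counter."""
        self.count = 0
        self.events.append("rebalance completed, counter reset")


quota = AutoFailoverQuota(max_count=1)
for node in ("cb-example-0009", "cb-example-0007"):  # each failed over, then rebalanced back in
    quota.node_down(node)
    quota.rebalance_completed()
quota.node_down("cb-example-0003")
# The 13:35 delta-recovery rebalance of 0003 has not completed, so no reset here.
failed_over = quota.node_down("cb-example-0004")
print(failed_over)  # False
print(quota.events[-1])
```

In this model, what blocks the auto-failover of 0004 is that the quota was consumed by 0003 and not yet released by a completed rebalance, which matches the warning event above.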

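The rebalance then failed at 13:41:13 (time_taken 333084 ms, about 5.5 minutes) because node 0004 was down. The exit reason reported by ns_janitor lists node 0003 under failed_nodes: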

{"timestamp":"2024-05-13T13:41:13.197Z","event_id":4,"component":"ns_server","description":"Rebalance failed","severity":"error","node":"cb-example-0000.cb-example.default.svc","otp_node":"ns_1@cb-example-0000.cb-example.default.svc","uuid":"c868553f-e41b-4e8e-8b69-4c2f88e0b854","extra_attributes":{"operation_id":"b7d215118f0ab480859a1b351f16c00d","nodes_info":{"active_nodes":["ns_1@cb-example-0000.cb-example.default.svc","ns_1@cb-example-0001.cb-example.default.svc","ns_1@cb-example-0002.cb-example.default.svc","ns_1@cb-example-0003.cb-example.default.svc","ns_1@cb-example-0004.cb-example.default.svc","ns_1@cb-example-0005.cb-example.default.svc","ns_1@cb-example-0006.cb-example.default.svc","ns_1@cb-example-0007.cb-example.default.svc","ns_1@cb-example-0008.cb-example.default.svc","ns_1@cb-example-0009.cb-example.default.svc"],"keep_nodes":["ns_1@cb-example-0000.cb-example.default.svc","ns_1@cb-example-0001.cb-example.default.svc","ns_1@cb-example-0002.cb-example.default.svc","ns_1@cb-example-0003.cb-example.default.svc","ns_1@cb-example-0004.cb-example.default.svc","ns_1@cb-example-0005.cb-example.default.svc","ns_1@cb-example-0006.cb-example.default.svc","ns_1@cb-example-0007.cb-example.default.svc","ns_1@cb-example-0008.cb-example.default.svc","ns_1@cb-example-0009.cb-example.default.svc"],"eject_nodes":[],"delta_nodes":["ns_1@cb-example-0003.cb-example.default.svc"],"failed_nodes":[]},"time_taken":333084,"completion_message":"Rebalance exited with reason {{badmatch,\n {error,\n

{failed_nodes,\n ['ns_1@cb-example-0003.cb-example.default.svc']}}},\n [{ns_janitor,cleanup_apply_config_body,4,\n [{file,\"src/ns_janitor.erl\"},{line,295}]},\n {ns_janitor,'-cleanup_apply_config/4-fun-0-',\n 4,\n [{file,\"src/ns_janitor.erl\"},{line,215}]},\n {async,'-async_init/4-fun-1-',3,\n [{file,\"src/async.erl\"},{line,191}]}]}."}}

Node 0004 then started coming back up (ns_server started at 13:43:42):

{"timestamp":"2024-05-13T13:43:42.437Z","event_id":1,"component":"ns_server","description":"Service started","severity":"info","node":"cb-example-0004.cb-example.default.svc","otp_node":"ns_1@cb-example-0004.cb-example.default.svc","uuid":"4472c76e-cebe-4bd7-87e2-04c0d01e1f90","extra_attributes":{"name":"ns_server"}}

After this, both nodes 0003 and 0004 were added back to the cluster, and the rebalance completed at 13:51:54:

{"timestamp":"2024-05-13T13:51:54.166Z","event_id":3,"component":"ns_server","description":"Rebalance completed","severity":"info","node":"cb-example-0000.cb-example.default.svc","otp_node":"ns_1@cb-example-0000.cb-example.default.svc","uuid":"b97c3919-3daa-4446-8d34-42b1b63974e6","extra_attributes":{"operation_id":"b8bd1d7c5b169bb59910247e63eca6b9","nodes_info":{"active_nodes":["ns_1@cb-example-0000.cb-example.default.svc","ns_1@cb-example-0001.cb-example.default.svc","ns_1@cb-example-0002.cb-example.default.svc","ns_1@cb-example-0003.cb-example.default.svc","ns_1@cb-example-0004.cb-example.default.svc","ns_1@cb-example-0005.cb-example.default.svc","ns_1@cb-example-0006.cb-example.default.svc","ns_1@cb-example-0007.cb-example.default.svc","ns_1@cb-example-0008.cb-example.default.svc","ns_1@cb-example-0009.cb-example.default.svc"],"keep_nodes":["ns_1@cb-example-0000.cb-example.default.svc","ns_1@cb-example-0001.cb-example.default.svc","ns_1@cb-example-0002.cb-example.default.svc","ns_1@cb-example-0003.cb-example.default.svc","ns_1@cb-example-0004.cb-example.default.svc","ns_1@cb-example-0005.cb-example.default.svc","ns_1@cb-example-0006.cb-example.default.svc","ns_1@cb-example-0007.cb-example.default.svc","ns_1@cb-example-0008.cb-example.default.svc","ns_1@cb-example-0009.cb-

If a node (B) goes down or is auto-failed over while a previously failed-over node (A) is still being rebalanced back in, data from node A that was replicated onto node B may be lost, because each bucket has only 1 replica.
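To illustrate the risk: with 1 replica, every vBucket has exactly two copies, so it loses all online copies only when both sit on nodes that are out of the cluster at the same time. A minimal sketch using a toy, evenly spread placement (not Couchbase's actual vBucket map algorithm) over 6 hypothetical data nodes numbered 0 to 5, with 1024 vBuckets per bucket (the default on Linux).

```python
from typing import Dict, Iterable, Tuple

NUM_VBUCKETS = 1024  # default number of vBuckets per bucket on Linux


def toy_vbucket_map(num_nodes: int, num_vbuckets: int = NUM_VBUCKETS) -> Dict[int, Tuple[int, int]]:
    """Map each vBucket to (active node, replica node) with 1 replica.

    Toy placement only: actives round-robin across nodes and the replica is
    shifted by an offset in 1..num_nodes-1, so it never lands on the active's node.
    """
    vb_map = {}
    for vb in range(num_vbuckets):
        active = vb % num_nodes
        offset = 1 + (vb // num_nodes) % (num_nodes - 1)
        vb_map[vb] = (active, (active + offset) % num_nodes)
    return vb_map


def vbuckets_without_copy(vb_map: Dict[int, Tuple[int, int]], lost_nodes: Iterable[int]) -> int:
    """Count vBuckets whose active and replica are both on lost (failed-over/down) nodes."""
    lost = set(lost_nodes)
    return sum(1 for copies in vb_map.values() if set(copies) <= lost)


vb_map = toy_vbucket_map(num_nodes=6)
print(vbuckets_without_copy(vb_map, {0}))     # only A out: every vBucket keeps a copy
print(vbuckets_without_copy(vb_map, {0, 1}))  # A and B out at the same time
```

With an even spread, roughly 1024 × 2 / (6 × 5) ≈ 68 vBuckets have both copies on any given pair of nodes. The model does not account for node A's on-disk data that delta recovery would reuse, nor for which pods in this cluster actually run the Data Service.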

Need to investigate:

1. Why did node 0004 go down during the rebalance that was adding node 0003 back?

2. Was this part of the EKS upgrade, or caused by another issue?
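For question 1, a first step is to check which node-down events fall inside a rebalance window. A minimal sketch using the rebalance start/end timestamps and the node-down timestamp quoted in the events above; the same helper could be fed worker-node drain times from the Kubernetes side, which are not part of this report.

```python
from datetime import datetime
from typing import List, Sequence, Tuple


def parse_ts(ts: str) -> datetime:
    """Parse event timestamps such as '2024-05-13T13:37:30.281Z' (UTC)."""
    return datetime.strptime(ts, "%Y-%m-%dT%H:%M:%S.%fZ")


def downs_during_rebalance(
    rebalances: Sequence[Tuple[str, str]],
    downs: Sequence[Tuple[str, str]],
) -> List[Tuple[str, str, str]]:
    """Return (node, down time, rebalance start) for each down inside a rebalance window."""
    hits = []
    for start, end in rebalances:
        window_start, window_end = parse_ts(start), parse_ts(end)
        for down_ts, node in downs:
            if window_start <= parse_ts(down_ts) <= window_end:
                hits.append((node, down_ts, start))
    return hits


rebalances = [
    ("2024-05-13T13:24:15.566Z", "2024-05-13T13:24:52.795Z"),  # node 0009 added back
    ("2024-05-13T13:35:40.425Z", "2024-05-13T13:41:13.197Z"),  # delta recovery of 0003 (failed)
]
downs = [("2024-05-13T13:37:30.281Z", "cb-example-0004")]
for node, down_ts, start in downs_during_rebalance(rebalances, downs):
    print(f"{node} went down at {down_ts}, during the rebalance started at {start}")
```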

CB Collect: https://supportal.couchbase.com/snapshot/8387b5feeb1f87c29dfab6ecb4add56a::1

Operator logs:

cbopinfo-20240513T192014+0530.tar.gz

Screenshot: UpdateConfig of the node group
