Description

Couchbase Cluster Description

- Set up the cluster as per the required specifications

- Each node is an m5.4xlarge instance. (16 vCPUs and 64GB RAM)

- 6 Data Service nodes and 4 Index and Query Service nodes.

- 10 Buckets (with 1 replica), Full Eviction and Auto-failover set to 5s.

- ~100GB data per bucket → ~1TB data loaded onto cluster.

- 50 Primary Indexes with 1 Replica each. (Total 100 Indexes)

- Continuous data and query workload on all buckets during the upgrade process.

- Attached CloudWatch and Fluent Bit for forwarding logs.
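
The sizing figures above can be cross-checked with a little arithmetic. This is only a sketch: it assumes the per-bucket figure means ~100 GB (inferred from the ~1 TB total across 10 buckets) and that one replica roughly doubles the stored data and the index count. The variable names are illustrative.

```python
# Cross-check of the cluster sizing figures quoted in the description.
# Assumption: "~100 data per bucket" means ~100 GB per bucket.
BUCKETS = 10
DATA_PER_BUCKET_GB = 100   # assumed unit: GB
BUCKET_REPLICAS = 1
DATA_NODES = 6
PRIMARY_INDEXES = 50
INDEX_REPLICAS = 1

# Active data only, as loaded by the workload.
active_data_gb = BUCKETS * DATA_PER_BUCKET_GB
# Active plus replica copies held by the Data Service nodes.
total_data_gb = active_data_gb * (1 + BUCKET_REPLICAS)
# Rough share per data node, assuming an even distribution.
per_data_node_gb = total_data_gb / DATA_NODES
# Each primary index plus its replica.
total_indexes = PRIMARY_INDEXES * (1 + INDEX_REPLICAS)

print(f"active data: ~{active_data_gb} GB")
print(f"active + replica data: ~{total_data_gb} GB")
print(f"per data node: ~{per_data_node_gb:.0f} GB")
print(f"total indexes: {total_indexes}")
```

Under these assumptions each data node holds roughly a third of a terabyte, which gives a sense of how much data a failover or rebalance has to account for.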

Task : Upgrade EKS 1.25 -> 1.26

Observation:-

- EKS upgrade for K8S control plane completed successfully.

- During the worker node upgrade, a pod was drained and recreated on a node with the updated kubelet version. The Operator was still waiting for the failover event, so it had not reconciled and no rebalance had occurred.

- The EKS upgrade then moved on to the next node and drained other CB pods.

{"level":"info","ts":"2024-05-31T11:37:55Z","logger":"cluster","msg":"Reconciliation failed","cluster":"default/cb-example","error":"reconcile was blocked from running: waiting for pod failover","stack":"github.com/couchbase/couchbase-operator/pkg/cluster.(*ReconcileMachine).handleDownNodes\n\tgithub.com/couchbase/couchbase-operator/pkg/cluster/nodereconcile.go:435\ngithub.com/couchbase/couchbase-operator/pkg/cluster.(*ReconcileMachine).exec\n\tgithub.com/couchbase/couchbase-operator/pkg/cluster/nodereconcile.go:319\ngithub.com/couchbase/couchbase-operator/pkg/cluster.(*Cluster).reconcileMembers\n\tgithub.com/couchbase/couchbase-operator/pkg/cluster/reconcile.go:268\ngithub.com/couchbase/couchbase-operator/pkg/cluster.(*Cluster).reconcile\n\tgithub.com/couchbase/couchbase-operator/pkg/cluster/reconcile.go:177\ngithub.com/couchbase/couchbase-operator/pkg/cluster.(*Cluster).runReconcile\n\tgithub.com/couchbase/couchbase-operator/pkg/cluster/cluster.go:492\ngithub.com/couchbase/couchbase-operator/pkg/cluster.(*Cluster).Update\n\tgithub.com/couchbase/couchbase-operator/pkg/cluster/cluster.go:535\ngithub.com/couchbase/couchbase-operator/pkg/controller.(*CouchbaseClusterReconciler).Reconcile\n\tgithub.com/couchbase/couchbase-operator/pkg/controller/controller.go:90\nsigs.k8s.io/controller-runtime/pkg/internal/controller.(*Controller).Reconcile\n\tsigs.k8s.io/controller-runtime@v0.16.3/pkg/internal/controller/controller.go:119\nsigs.k8s.io/controller-runtime/pkg/internal/controller.(*Controller).reconcileHandler\n\tsigs.k8s.io/controller-runtime@v0.16.3/pkg/internal/controller/controller.go:316\nsigs.k8s.io/controller-runtime/pkg/internal/controller.(*Controller).processNextWorkItem\n\tsigs.k8s.io/controller-runtime@v0.16.3/pkg/internal/controller/controller.go:266\nsigs.k8s.io/controller-runtime/pkg/internal/controller.(*Controller).Start.func2.2\n\tsigs.k8s.io/controller-runtime@v0.16.3/pkg/internal/controller/controller.go:227"}

{"level":"info","ts":"2024-05-31T11:37:55Z","logger":"cluster","msg":"Resource updated","cluster":"default/cb-example","diff":"+{v2.ClusterStatus}.Conditions[?->2]:{Type:Error Status:True LastUpdateTime:2024-05-31T11:37:55Z LastTransitionTime:2024-05-31T11:37:55Z Reason:ErrorEncountered Message:reconcile was blocked from running: waiting for pod failover}"}

CloudWatch - https://us-east-2.console.aws.amazon.com/cloudwatch/home?region=us-east-2#logsV2:log-groups$3FlogGroupNameFilter$3Dmanik-58
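
When scanning a longer operator log for the same condition, the JSON entries can be filtered by their `msg` field. The sketch below is one possible way to do that. The sample data is an abbreviated copy of the two entries above (the `stack` field is omitted and the `diff` value is shortened), and the function name `blocked_reconciles` is made up for illustration.

```python
import json

# Abbreviated copies of the two operator log entries from this ticket.
# The "stack" field is omitted and the "diff" value is shortened.
SAMPLE_LOG = """\
{"level":"info","ts":"2024-05-31T11:37:55Z","logger":"cluster","msg":"Reconciliation failed","cluster":"default/cb-example","error":"reconcile was blocked from running: waiting for pod failover"}
{"level":"info","ts":"2024-05-31T11:37:55Z","logger":"cluster","msg":"Resource updated","cluster":"default/cb-example","diff":"+{v2.ClusterStatus}.Conditions[?->2]:{Type:Error Status:True ...}"}
"""


def blocked_reconciles(lines):
    """Yield (timestamp, cluster, error) for each 'Reconciliation failed' entry."""
    for line in lines:
        line = line.strip()
        if not line:
            continue
        try:
            entry = json.loads(line)
        except json.JSONDecodeError:
            continue  # skip anything that is not a JSON log entry
        if entry.get("msg") == "Reconciliation failed":
            yield entry.get("ts"), entry.get("cluster"), entry.get("error")


failures = list(blocked_reconciles(SAMPLE_LOG.splitlines()))
for ts, cluster, error in failures:
    print(ts, cluster, error)
```

Run against a full operator log file, the same filter would show how long the Operator stayed in the "waiting for pod failover" state.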

Pre-upgrade pod information :-

| NAME | READY | STATUS | RESTARTS | AGE | IP | NODE | NOMINATED NODE | READINESS GATES |
|---|---|---|---|---|---|---|---|---|
| cb-example-0000 | 3/3 | Running | 0 | 41m | 10.0.5.44 | ip-10-0-5-106.us-east-2.compute.internal | `<none>` | 1/1 |
| cb-example-0001 | 3/3 | Running | 0 | 40m | 10.0.4.70 | ip-10-0-4-33.us-east-2.compute.internal | `<none>` | 1/1 |
| cb-example-0002 | 3/3 | Running | 0 | 40m | 10.0.8.196 | ip-10-0-11-224.us-east-2.compute.internal | `<none>` | 1/1 |
| cb-example-0003 | 3/3 | Running | 0 | 40m | 10.0.12.219 | ip-10-0-12-44.us-east-2.compute.internal | `<none>` | 1/1 |
| cb-example-0004 | 3/3 | Running | 0 | 40m | 10.0.8.185 | ip-10-0-11-193.us-east-2.compute.internal | `<none>` | 1/1 |
| cb-example-0005 | 3/3 | Running | 0 | 40m | 10.0.14.254 | ip-10-0-14-251.us-east-2.compute.internal | `<none>` | 1/1 |
| cb-example-0006 | 3/3 | Running | 0 | 40m | 10.0.8.6 | ip-10-0-10-236.us-east-2.compute.internal | `<none>` | 1/1 |
| cb-example-0007 | 3/3 | Running | 0 | 40m | 10.0.6.130 | ip-10-0-6-196.us-east-2.compute.internal | `<none>` | 1/1 |
| cb-example-0008 | 3/3 | Running | 0 | 40m | 10.0.14.9 | ip-10-0-13-12.us-east-2.compute.internal | `<none>` | 1/1 |
| cb-example-0009 | 3/3 | Running | 0 | 40m | 10.0.7.5 | ip-10-0-4-14.us-east-2.compute.internal | `<none>` | 1/1 |
| couchbase-operator-5d887864c9-gsvw6 | 1/1 | Running | 3 (45m ago) | 52m | 10.0.6.29 | ip-10-0-5-177.us-east-2.compute.internal | `<none>` | `<none>` |
| couchbase-operator-admission-8f64bf8c8-4sl8d | 1/1 | Running | 0 | 52m | 10.0.10.206 | ip-10-0-10-50.us-east-2.compute.internal | `<none>` | `<none>` |

Operator logs during the upgrade :- cbopinfo-20240531T174952+0530.tar.gz

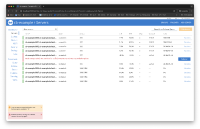

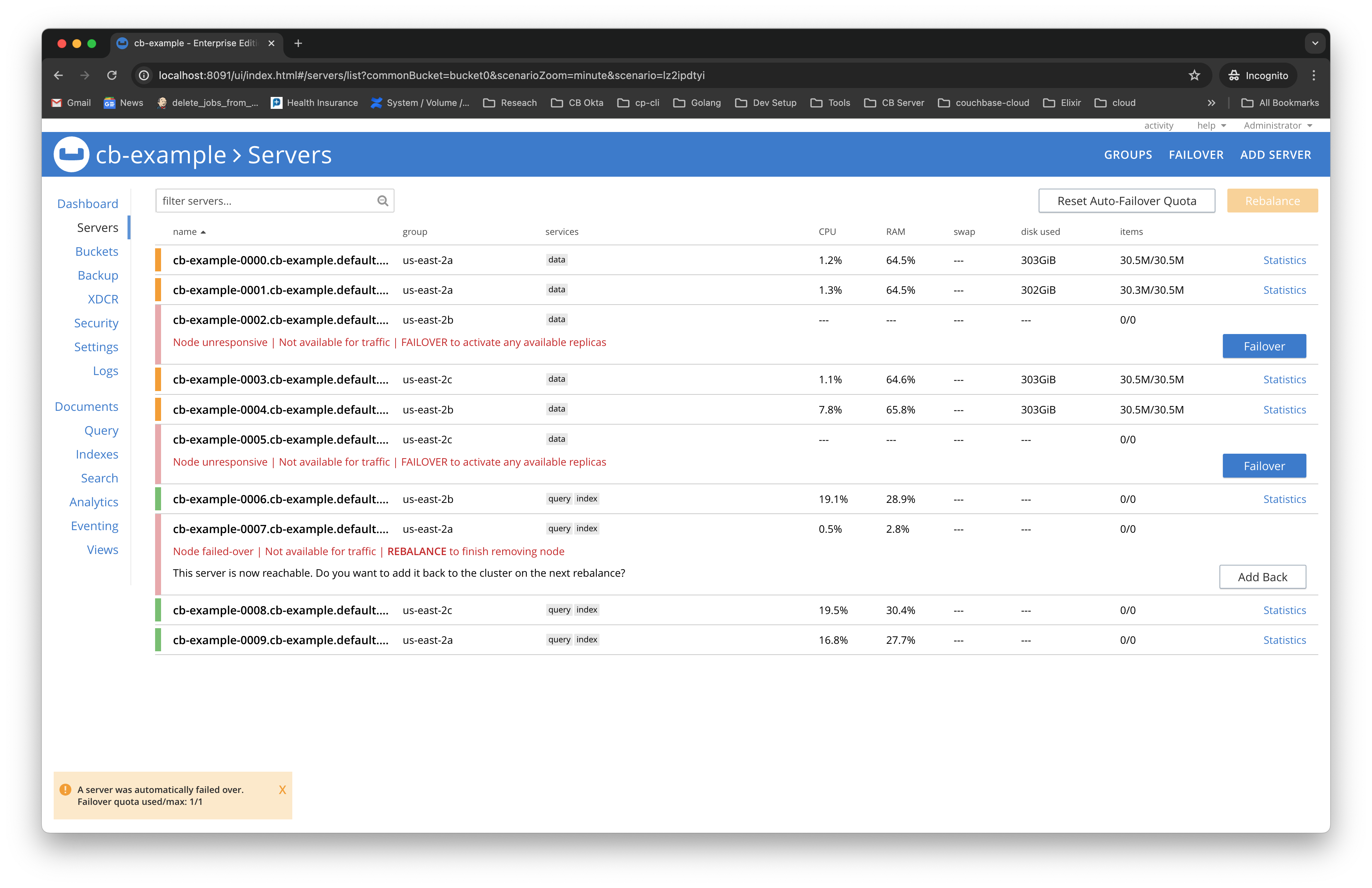

Cluster screenshot :-

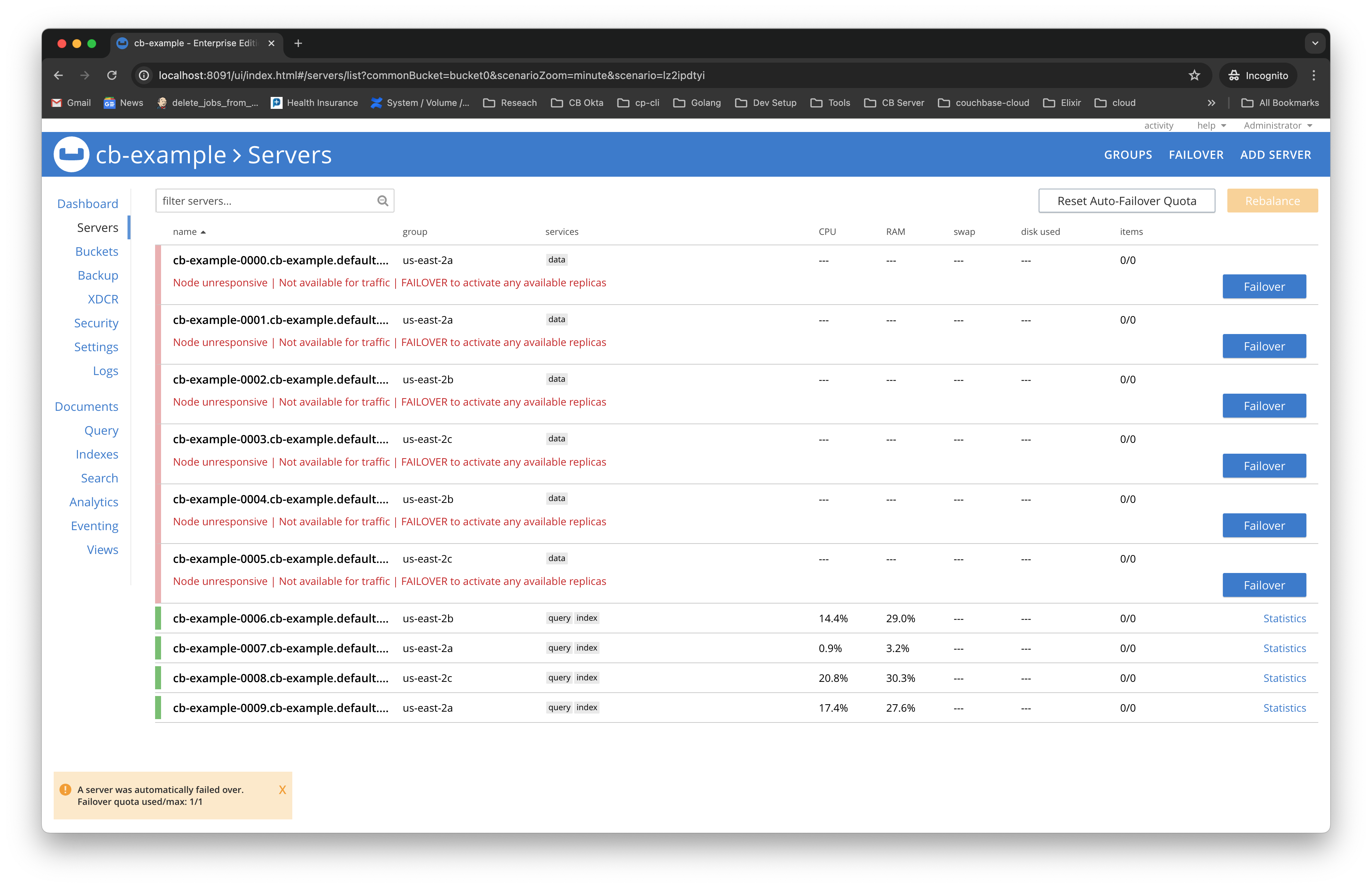

Pod and node information screenshot :-

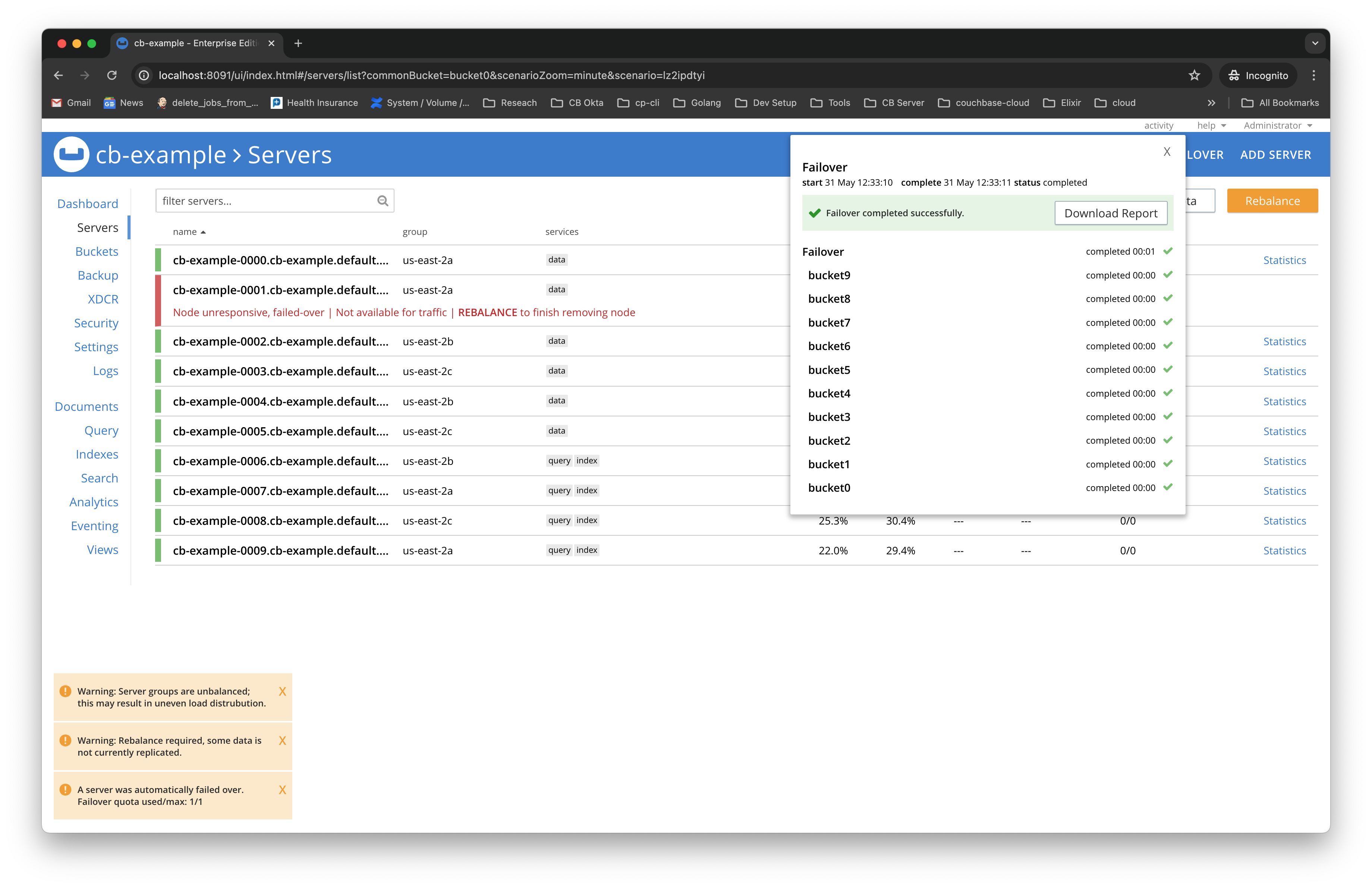

The cluster finally became stable and started a rebalance :-

Pod 0001 was failed over again :-

K8S events recorded for 0001 :-

Events:

| Type | Reason | Age | From | Message |
|---|---|---|---|---|
| Normal | Scheduled | 6m42s | default-scheduler | Successfully assigned default/cb-example-0001 to ip-10-0-4-48.us-east-2.compute.internal |
| Warning | FailedAttachVolume | 6m42s | attachdetach-controller | Multi-Attach error for volume "pvc-ebb69b48-b348-4eaa-b276-dd4d886e0013" Volume is already exclusively attached to one node and can't be attached to another |
| Warning | FailedAttachVolume | 6m42s | attachdetach-controller | Multi-Attach error for volume "pvc-b54d0d75-197e-4356-bfbe-b93435fea334" Volume is already exclusively attached to one node and can't be attached to another |
| Warning | FailedMount | 6m41s | kubelet | MountVolume.SetUp failed for volume "couchbase-server-tls-ca" : failed to sync secret cache: timed out waiting for the condition |
| Warning | FailedMount | 6m41s | kubelet | MountVolume.SetUp failed for volume "kube-api-access-5zpqv" : failed to sync configmap cache: timed out waiting for the condition |
| Warning | FailedMount | 4m39s | kubelet | Unable to attach or mount volumes: unmounted volumes=[cb-example-0001-data-00 cb-example-0001-default-00], unattached volumes=[kube-api-access-5zpqv cb-example-0001-data-00 couchbase-server-tls-ca fluent-bit-config podinfo cb-example-0001-default-00]: timed out waiting for the condition |
| Warning | FailedMount | 2m23s | kubelet | Unable to attach or mount volumes: unmounted volumes=[cb-example-0001-default-00 cb-example-0001-data-00], unattached volumes=[fluent-bit-config podinfo cb-example-0001-default-00 kube-api-access-5zpqv cb-example-0001-data-00 couchbase-server-tls-ca]: timed out waiting for the condition |
| Normal | SuccessfulAttachVolume | 39s | attachdetach-controller | AttachVolume.Attach succeeded for volume "pvc-ebb69b48-b348-4eaa-b276-dd4d886e0013" |
| Normal | SuccessfulAttachVolume | 39s | attachdetach-controller | AttachVolume.Attach succeeded for volume "pvc-b54d0d75-197e-4356-bfbe-b93435fea334" |
| Normal | Pulling | 38s | kubelet | Pulling image "couchbase/server:7.2.5" |
| Normal | Pulled | 22s | kubelet | Successfully pulled image "couchbase/server:7.2.5" in 15.643231347s (15.643244002s including waiting) |
| Normal | Created | 22s | kubelet | Created container couchbase-server-init |
| Normal | Started | 22s | kubelet | Started container couchbase-server-init |
| Normal | Pulled | 12s | kubelet | Container image "couchbase/server:7.2.5" already present on machine |
| Normal | Created | 12s | kubelet | Created container couchbase-server |
| Normal | Started | 12s | kubelet | Started container couchbase-server |
| Normal | Pulling | 12s | kubelet | Pulling image "couchbase/fluent-bit:1.2.3" |
| Normal | Pulled | 10s | kubelet | Successfully pulled image "couchbase/fluent-bit:1.2.3" in 1.66162297s (1.661636639s including waiting) |
| Normal | Created | 10s | kubelet | Created container logging |
| Normal | Started | 10s | kubelet | Started container logging |
| Normal | Pulling | 10s | kubelet | Pulling image "busybox:1.33.1" |
| Normal | Pulled | 10s | kubelet | Successfully pulled image "busybox:1.33.1" in 666.151691ms (666.165338ms including waiting) |
| Normal | Created | 10s | kubelet | Created container audit-cleanup |
| Normal | Started | 10s | kubelet | Started container audit-cleanup |

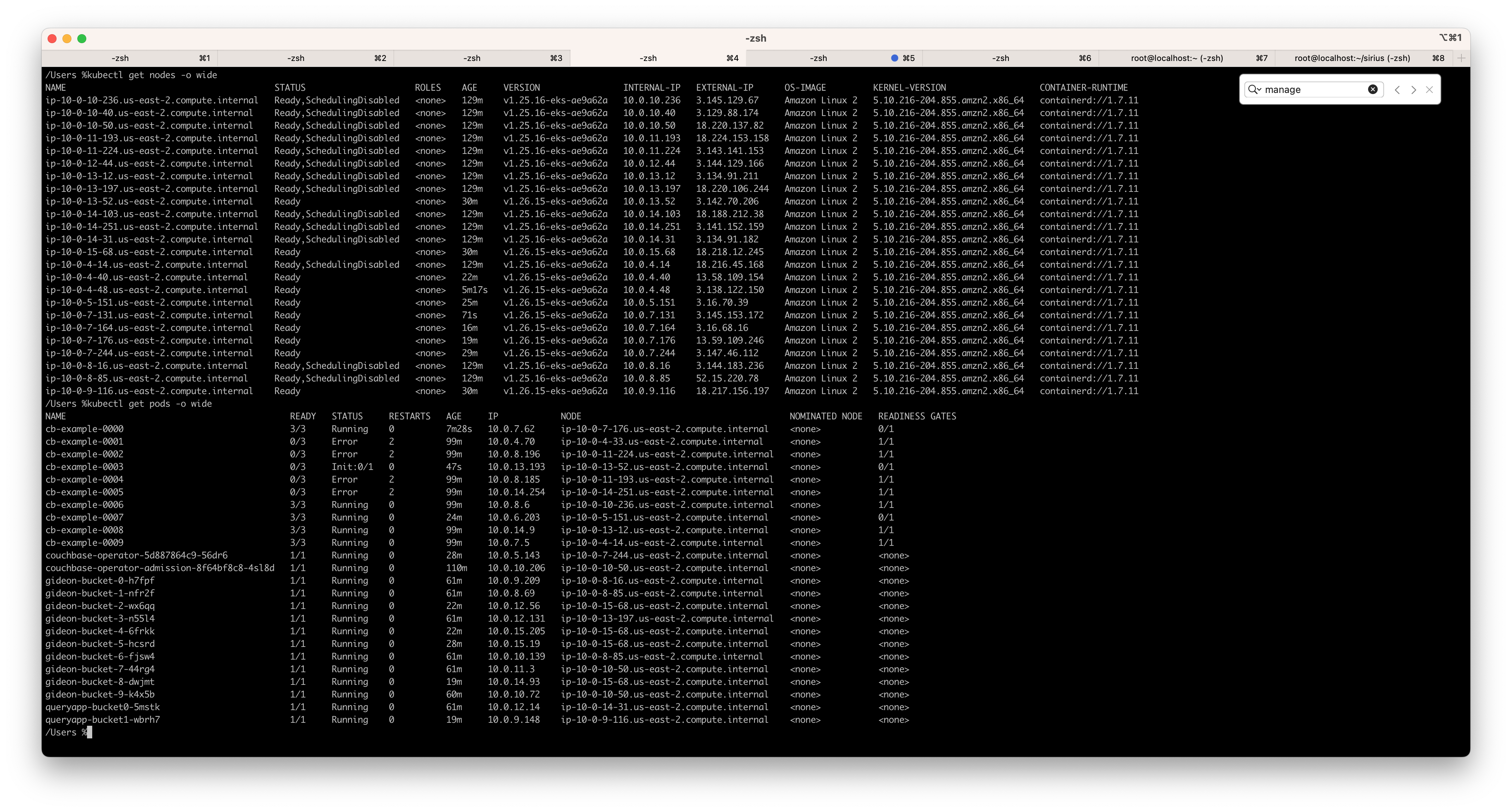
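
The event ages for cb-example-0001 give a rough idea of how long the pod waited for its volumes. The sketch below converts the kubectl age strings to seconds and subtracts them. It assumes all ages were printed at the same moment, so their differences approximate elapsed time; the helper name `age_to_seconds` is hypothetical.

```python
import re


def age_to_seconds(age):
    """Convert a kubectl age string such as '6m42s' or '39s' to seconds."""
    units = {"d": 86400, "h": 3600, "m": 60, "s": 1}
    parts = re.findall(r"(\d+)([dhms])", age)
    # Reject strings that contain anything other than <number><unit> pairs.
    if not parts or "".join(n + u for n, u in parts) != age:
        raise ValueError(f"unrecognised age: {age!r}")
    return sum(int(n) * units[u] for n, u in parts)


# Ages copied from the cb-example-0001 events in this ticket.
scheduled = age_to_seconds("6m42s")   # Scheduled
attached = age_to_seconds("39s")      # SuccessfulAttachVolume
started = age_to_seconds("12s")       # Started container couchbase-server

attach_wait = scheduled - attached    # time spent waiting for the persistent volumes
start_delay = scheduled - started     # time from scheduling to server container start

print(f"volume attach wait: {attach_wait}s")
print(f"scheduled -> couchbase-server started: {start_delay}s")
```

By this estimate the pod waited about six minutes for the Multi-Attach condition to clear, which is far longer than the 5s auto-failover timeout configured on the cluster.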

Node and pod information after upgrade :-

| NAME | STATUS | ROLES | AGE | VERSION | INTERNAL-IP | EXTERNAL-IP | OS-IMAGE | KERNEL-VERSION | CONTAINER-RUNTIME |
|---|---|---|---|---|---|---|---|---|---|
| ip-10-0-10-236.us-east-2.compute.internal | Ready | `<none>` | 170m | v1.25.16-eks-ae9a62a | 10.0.10.236 | 3.145.129.67 | Amazon Linux 2 | 5.10.216-204.855.amzn2.x86_64 | containerd://1.7.11 |
| ip-10-0-10-40.us-east-2.compute.internal | Ready | `<none>` | 170m | v1.25.16-eks-ae9a62a | 10.0.10.40 | 3.129.88.174 | Amazon Linux 2 | 5.10.216-204.855.amzn2.x86_64 | containerd://1.7.11 |
| ip-10-0-10-50.us-east-2.compute.internal | Ready | `<none>` | 170m | v1.25.16-eks-ae9a62a | 10.0.10.50 | 18.220.137.82 | Amazon Linux 2 | 5.10.216-204.855.amzn2.x86_64 | containerd://1.7.11 |
| ip-10-0-11-193.us-east-2.compute.internal | Ready | `<none>` | 170m | v1.25.16-eks-ae9a62a | 10.0.11.193 | 18.224.153.158 | Amazon Linux 2 | 5.10.216-204.855.amzn2.x86_64 | containerd://1.7.11 |
| ip-10-0-11-224.us-east-2.compute.internal | Ready | `<none>` | 170m | v1.25.16-eks-ae9a62a | 10.0.11.224 | 3.143.141.153 | Amazon Linux 2 | 5.10.216-204.855.amzn2.x86_64 | containerd://1.7.11 |
| ip-10-0-12-44.us-east-2.compute.internal | Ready | `<none>` | 170m | v1.25.16-eks-ae9a62a | 10.0.12.44 | 3.144.129.166 | Amazon Linux 2 | 5.10.216-204.855.amzn2.x86_64 | containerd://1.7.11 |
| ip-10-0-13-12.us-east-2.compute.internal | Ready | `<none>` | 170m | v1.25.16-eks-ae9a62a | 10.0.13.12 | 3.134.91.211 | Amazon Linux 2 | 5.10.216-204.855.amzn2.x86_64 | containerd://1.7.11 |
| ip-10-0-13-197.us-east-2.compute.internal | Ready | `<none>` | 170m | v1.25.16-eks-ae9a62a | 10.0.13.197 | 18.220.106.244 | Amazon Linux 2 | 5.10.216-204.855.amzn2.x86_64 | containerd://1.7.11 |
| ip-10-0-13-52.us-east-2.compute.internal | Ready | `<none>` | 71m | v1.26.15-eks-ae9a62a | 10.0.13.52 | 3.142.70.206 | Amazon Linux 2 | 5.10.216-204.855.amzn2.x86_64 | containerd://1.7.11 |
| ip-10-0-14-103.us-east-2.compute.internal | Ready | `<none>` | 170m | v1.25.16-eks-ae9a62a | 10.0.14.103 | 18.188.212.38 | Amazon Linux 2 | 5.10.216-204.855.amzn2.x86_64 | containerd://1.7.11 |
| ip-10-0-14-251.us-east-2.compute.internal | Ready | `<none>` | 170m | v1.25.16-eks-ae9a62a | 10.0.14.251 | 3.141.152.159 | Amazon Linux 2 | 5.10.216-204.855.amzn2.x86_64 | containerd://1.7.11 |
| ip-10-0-14-31.us-east-2.compute.internal | Ready | `<none>` | 170m | v1.25.16-eks-ae9a62a | 10.0.14.31 | 3.134.91.182 | Amazon Linux 2 | 5.10.216-204.855.amzn2.x86_64 | containerd://1.7.11 |
| ip-10-0-15-68.us-east-2.compute.internal | Ready | `<none>` | 71m | v1.26.15-eks-ae9a62a | 10.0.15.68 | 18.218.12.245 | Amazon Linux 2 | 5.10.216-204.855.amzn2.x86_64 | containerd://1.7.11 |
| ip-10-0-4-14.us-east-2.compute.internal | Ready | `<none>` | 170m | v1.25.16-eks-ae9a62a | 10.0.4.14 | 18.216.45.168 | Amazon Linux 2 | 5.10.216-204.855.amzn2.x86_64 | containerd://1.7.11 |
| ip-10-0-4-40.us-east-2.compute.internal | Ready | `<none>` | 63m | v1.26.15-eks-ae9a62a | 10.0.4.40 | 13.58.109.154 | Amazon Linux 2 | 5.10.216-204.855.amzn2.x86_64 | containerd://1.7.11 |
| ip-10-0-4-48.us-east-2.compute.internal | Ready | `<none>` | 46m | v1.26.15-eks-ae9a62a | 10.0.4.48 | 3.138.122.150 | Amazon Linux 2 | 5.10.216-204.855.amzn2.x86_64 | containerd://1.7.11 |
| ip-10-0-5-151.us-east-2.compute.internal | Ready | `<none>` | 66m | v1.26.15-eks-ae9a62a | 10.0.5.151 | 3.16.70.39 | Amazon Linux 2 | 5.10.216-204.855.amzn2.x86_64 | containerd://1.7.11 |
| ip-10-0-7-164.us-east-2.compute.internal | Ready | `<none>` | 57m | v1.26.15-eks-ae9a62a | 10.0.7.164 | 3.16.68.16 | Amazon Linux 2 | 5.10.216-204.855.amzn2.x86_64 | containerd://1.7.11 |
| ip-10-0-7-176.us-east-2.compute.internal | Ready | `<none>` | 60m | v1.26.15-eks-ae9a62a | 10.0.7.176 | 13.59.109.246 | Amazon Linux 2 | 5.10.216-204.855.amzn2.x86_64 | containerd://1.7.11 |
| ip-10-0-7-244.us-east-2.compute.internal | Ready | `<none>` | 70m | v1.26.15-eks-ae9a62a | 10.0.7.244 | 3.147.46.112 | Amazon Linux 2 | 5.10.216-204.855.amzn2.x86_64 | containerd://1.7.11 |
| ip-10-0-8-16.us-east-2.compute.internal | Ready | `<none>` | 170m | v1.25.16-eks-ae9a62a | 10.0.8.16 | 3.144.183.236 | Amazon Linux 2 | 5.10.216-204.855.amzn2.x86_64 | containerd://1.7.11 |
| ip-10-0-8-85.us-east-2.compute.internal | Ready | `<none>` | 170m | v1.25.16-eks-ae9a62a | 10.0.8.85 | 52.15.220.78 | Amazon Linux 2 | 5.10.216-204.855.amzn2.x86_64 | containerd://1.7.11 |
| ip-10-0-9-116.us-east-2.compute.internal | Ready,SchedulingDisabled | `<none>` | 71m | v1.26.15-eks-ae9a62a | 10.0.9.116 | 18.217.156.197 | Amazon Linux 2 | 5.10.216-204.855.amzn2.x86_64 | containerd://1.7.11 |

/Users % kubectl get pods -o wide

| NAME | READY | STATUS | RESTARTS | AGE | IP | NODE | NOMINATED NODE | READINESS GATES |
|---|---|---|---|---|---|---|---|---|
| cb-example-0000 | 3/3 | Running | 0 | 48m | 10.0.7.62 | ip-10-0-7-176.us-east-2.compute.internal | `<none>` | 1/1 |
| cb-example-0001 | 3/3 | Running | 0 | 10m | 10.0.6.130 | ip-10-0-4-48.us-east-2.compute.internal | `<none>` | 0/1 |
| cb-example-0002 | 3/3 | Running | 0 | 29m | 10.0.8.185 | ip-10-0-11-193.us-east-2.compute.internal | `<none>` | 1/1 |
| cb-example-0003 | 3/3 | Running | 0 | 41m | 10.0.13.193 | ip-10-0-13-52.us-east-2.compute.internal | `<none>` | 1/1 |
| cb-example-0004 | 3/3 | Running | 0 | 40m | 10.0.9.131 | ip-10-0-9-116.us-east-2.compute.internal | `<none>` | 1/1 |
| cb-example-0005 | 3/3 | Running | 0 | 20m | 10.0.12.79 | ip-10-0-14-251.us-east-2.compute.internal | `<none>` | 1/1 |
| cb-example-0006 | 3/3 | Running | 0 | 140m | 10.0.8.6 | ip-10-0-10-236.us-east-2.compute.internal | `<none>` | 1/1 |
| cb-example-0007 | 3/3 | Running | 0 | 65m | 10.0.6.203 | ip-10-0-5-151.us-east-2.compute.internal | `<none>` | 1/1 |
| cb-example-0008 | 3/3 | Running | 0 | 140m | 10.0.14.9 | ip-10-0-13-12.us-east-2.compute.internal | `<none>` | 1/1 |
| cb-example-0009 | 3/3 | Running | 0 | 140m | 10.0.7.5 | ip-10-0-4-14.us-east-2.compute.internal | `<none>` | 1/1 |
| couchbase-operator-5d887864c9-56dr6 | 1/1 | Running | 0 | 69m | 10.0.5.143 | ip-10-0-7-244.us-east-2.compute.internal | `<none>` | `<none>` |
| couchbase-operator-admission-8f64bf8c8-4sl8d | 1/1 | Running | 0 | 151m | 10.0.10.206 | ip-10-0-10-50.us-east-2.compute.internal | `<none>` | `<none>` |
| gideon-bucket-0-h7fpf | 1/1 | Running | 0 | 102m | 10.0.9.209 | ip-10-0-8-16.us-east-2.compute.internal | `<none>` | `<none>` |
| gideon-bucket-1-nfr2f | 1/1 | Running | 0 | 102m | 10.0.8.69 | ip-10-0-8-85.us-east-2.compute.internal | `<none>` | `<none>` |
| gideon-bucket-2-wx6qq | 1/1 | Running | 0 | 63m | 10.0.12.56 | ip-10-0-15-68.us-east-2.compute.internal | `<none>` | `<none>` |
| gideon-bucket-3-n55l4 | 1/1 | Running | 0 | 102m | 10.0.12.131 | ip-10-0-13-197.us-east-2.compute.internal | `<none>` | `<none>` |
| gideon-bucket-4-6frkk | 1/1 | Running | 0 | 63m | 10.0.15.205 | ip-10-0-15-68.us-east-2.compute.internal | `<none>` | `<none>` |
| gideon-bucket-5-hcsrd | 1/1 | Running | 0 | 69m | 10.0.15.19 | ip-10-0-15-68.us-east-2.compute.internal | `<none>` | `<none>` |
| gideon-bucket-6-fjsw4 | 1/1 | Running | 0 | 102m | 10.0.10.139 | ip-10-0-8-85.us-east-2.compute.internal | `<none>` | `<none>` |
| gideon-bucket-7-44rg4 | 1/1 | Running | 0 | 102m | 10.0.11.3 | ip-10-0-10-50.us-east-2.compute.internal | `<none>` | `<none>` |
| gideon-bucket-8-dwjmt | 1/1 | Running | 0 | 60m | 10.0.14.93 | ip-10-0-15-68.us-east-2.compute.internal | `<none>` | `<none>` |
| gideon-bucket-9-k4x5b | 1/1 | Running | 0 | 101m | 10.0.10.72 | ip-10-0-10-50.us-east-2.compute.internal | `<none>` | `<none>` |
| queryapp-bucket0-5mstk | 1/1 | Running | 0 | 102m | 10.0.12.14 | ip-10-0-14-31.us-east-2.compute.internal | `<none>` | `<none>` |
| queryapp-bucket1-48wm9 | 1/1 | Running | 0 | 6m52s | 10.0.10.25 | ip-10-0-11-224.us-east-2.compute.internal | `<none>` | `<none>` |

CB logs after cluster became stable - http://supportal.couchbase.com/snapshot/452c70ce7262e813b23c47486d959ce0::0

Logs were successfully uploaded to the following URLs:

https://cb-engineering.s3.amazonaws.com/eks_worker_node_upgrade_failed/collectinfo-2024-05-31T124110-ns_1%40cb-example-0000.cb-example.default.svc.zip

https://cb-engineering.s3.amazonaws.com/eks_worker_node_upgrade_failed/collectinfo-2024-05-31T124110-ns_1%40cb-example-0001.cb-example.default.svc.zip

https://cb-engineering.s3.amazonaws.com/eks_worker_node_upgrade_failed/collectinfo-2024-05-31T124110-ns_1%40cb-example-0002.cb-example.default.svc.zip

https://cb-engineering.s3.amazonaws.com/eks_worker_node_upgrade_failed/collectinfo-2024-05-31T124110-ns_1%40cb-example-0003.cb-example.default.svc.zip

https://cb-engineering.s3.amazonaws.com/eks_worker_node_upgrade_failed/collectinfo-2024-05-31T124110-ns_1%40cb-example-0004.cb-example.default.svc.zip

https://cb-engineering.s3.amazonaws.com/eks_worker_node_upgrade_failed/collectinfo-2024-05-31T124110-ns_1%40cb-example-0005.cb-example.default.svc.zip

https://cb-engineering.s3.amazonaws.com/eks_worker_node_upgrade_failed/collectinfo-2024-05-31T124110-ns_1%40cb-example-0006.cb-example.default.svc.zip

https://cb-engineering.s3.amazonaws.com/eks_worker_node_upgrade_failed/collectinfo-2024-05-31T124110-ns_1%40cb-example-0007.cb-example.default.svc.zip

https://cb-engineering.s3.amazonaws.com/eks_worker_node_upgrade_failed/collectinfo-2024-05-31T124110-ns_1%40cb-example-0008.cb-example.default.svc.zip

https://cb-engineering.s3.amazonaws.com/eks_worker_node_upgrade_failed/collectinfo-2024-05-31T124110-ns_1%40cb-example-0009.cb-example.default.svc.zip

Operator logs after cluster became stable - cbopinfo-20240531T181723+0530.tar.gz

Pod 0004 was auto-failed over again :-

K8S events :-

Events:

| Type | Reason | Age | From | Message |
|---|---|---|---|---|
| Normal | Scheduled | 3m38s | default-scheduler | Successfully assigned default/cb-example-0004 to ip-10-0-8-16.us-east-2.compute.internal |
| Warning | FailedAttachVolume | 3m38s | attachdetach-controller | Multi-Attach error for volume "pvc-d4832dfe-61e2-4c61-bfaa-249101b54d67" Volume is already exclusively attached to one node and can't be attached to another |
| Warning | FailedAttachVolume | 3m38s | attachdetach-controller | Multi-Attach error for volume "pvc-6174aeea-1a1a-4da7-b63a-c8d07d8e0431" Volume is already exclusively attached to one node and can't be attached to another |
| Warning | FailedMount | 95s | kubelet | Unable to attach or mount volumes: unmounted volumes=[cb-example-0004-default-00 cb-example-0004-data-00], unattached volumes=[couchbase-server-tls-ca fluent-bit-config podinfo cb-example-0004-default-00 kube-api-access-ncvkd cb-example-0004-data-00]: timed out waiting for the condition |

Operator logs after 0004 recovered :- logs.txt

Collecting logs 8 hours later:-

CB logs - http://supportal.couchbase.com/snapshot/452c70ce7262e813b23c47486d959ce0::1

Operator logs - cbopinfo-20240601T060229+0530.tar.gz

EKS worker node upgrade failed from 1.25 to 1.26