Details

- Task
- Resolution: Won't Fix
- Major
- 4.1.1
- None
- Ubuntu, 64-bit, 3 nodes (4 CPU x 4 GB RAM)

Description

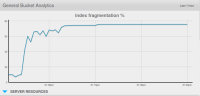

There is a problem with how GSI handles expired documents. It causes index fragmentation of up to 98%, which in turn causes enormous growth in used disk space ([index data size]:[index disk size] ≈ [1]:[1/(1 - fragmentation rate)]). Disk space usage returns to normal only after the Couchbase Server is restarted.
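The ratio above is easier to read as a worked number. Take fragmentation f as the share of the on-disk index file not occupied by live data, i.e. f = (disk - data) / disk, which is the definition the ratio implies. Then disk size = data size / (1 - f). At f = 0.98, the disk size is about 50 times the data size, which matches the 1:50 figure in the related forum thread.

The sketch below computes this in both directions. The class and method names are hypothetical and are not part of any Couchbase API.

```java
/** Illustrative helpers for the data size : disk size relation (hypothetical names, not a Couchbase API). */
final class FragmentationMath {

    private FragmentationMath() {}

    /** Disk size divided by data size for a fragmentation rate f in [0, 1): 1 / (1 - f). */
    static double diskToDataRatio(double fragmentation) {
        if (fragmentation < 0.0 || fragmentation >= 1.0) {
            throw new IllegalArgumentException("fragmentation must be in [0, 1)");
        }
        return 1.0 / (1.0 - fragmentation);
    }

    /** Fragmentation rate implied by observed sizes, assuming f = 1 - data / disk. */
    static double fragmentationFromSizes(long dataBytes, long diskBytes) {
        if (diskBytes <= 0 || dataBytes < 0 || dataBytes > diskBytes) {
            throw new IllegalArgumentException("expected 0 <= data <= disk and disk > 0");
        }
        return 1.0 - (double) dataBytes / diskBytes;
    }

    public static void main(String[] args) {
        // Print how many times larger the index file is than its live data
        for (double f : new double[] {0.5, 0.9, 0.98}) {
            System.out.printf("fragmentation %.0f%% -> disk is %.1fx data%n", f * 100, diskToDataRatio(f));
        }
    }
}
```

Running `fragmentationFromSizes` on the data and disk sizes shown in the UI should reproduce the fragmentation percentage the UI reports, if the UI uses the same definition.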

How to reproduce:

1. 4.1.1-EE GA (5914), 3 nodes (4 CPU x 4 GB RAM)

2. All nodes have all services enabled

3. Create a cluster with the default bucket (256 MB, full eviction, no password, 1 replica, view index replicas enabled, I/O priority high, flush enabled)

4. Run the following code, or write your own equivalent: 20 threads, each synchronously inserting 100,000 documents with indexed fields (the indexes must be created too):

```java
package highcpuafterload;

import com.couchbase.client.java.Bucket;
import com.couchbase.client.java.Cluster;
import com.couchbase.client.java.CouchbaseCluster;
import com.couchbase.client.java.document.JsonDocument;
import com.couchbase.client.java.document.json.JsonObject;
import com.couchbase.client.java.env.CouchbaseEnvironment;
import com.couchbase.client.java.env.DefaultCouchbaseEnvironment;
import com.couchbase.client.java.query.N1qlQuery;

import java.util.LinkedList;
import java.util.List;
import java.util.concurrent.Phaser;

public class BombardaMaxima extends Thread {

    private final int tid;

    // configure here
    private static final int threads = 20;
    private static final int docsPerThread = 100000;
    private static final int docTTLms = 30 * 1000;
    private static final int dumpToConsoleEachNDocs = 1000;

    // all writer threads plus the main thread meet at this barrier
    private static final Phaser phaser = new Phaser(threads + 1);

    private static final CouchbaseEnvironment ce;
    private static final Cluster cluster;
    private static final String bucket = "default";

    static {
        ce = DefaultCouchbaseEnvironment.create();

        // replace with the host names of your cluster nodes
        final List<String> nodes = new LinkedList<>();
        nodes.add("A.node");
        nodes.add("B.node");
        nodes.add("C.node");

        cluster = CouchbaseCluster.create(ce, nodes);
        final Bucket b = cluster.openBucket(bucket);

        // two GSI indexes over the fields written by the worker threads
        final String iQA = "CREATE INDEX iQA ON `default`(a, b) WHERE a IS VALUED USING GSI";
        final String iQX = "CREATE INDEX iQX ON `default`(a, c) WHERE a IS VALUED USING GSI";

        b.query(N1qlQuery.simple(iQA));
        b.query(N1qlQuery.simple(iQX));
    }

    public BombardaMaxima(final int tid) {
        this.tid = tid;
    }

    @Override
    public final void run() {
        try {
            final Bucket b;
            synchronized (cluster) { b = cluster.openBucket(bucket); }

            final long stm = System.currentTimeMillis();
            final JsonObject jo = JsonObject
                    .empty()
                    .put("a", stm)
                    .put("b", stm)
                    .put("c", stm);

            for (int i = 0; i < docsPerThread; i++) {
                b.upsert(JsonDocument.create(
                        // thread id + loop counter, so every upsert creates a distinct document
                        tid + ":" + i,
                        // expiry as an absolute Unix time in seconds: now + docTTLms
                        (int) ((System.currentTimeMillis() + docTTLms) / 1000),
                        jo)
                );
                if (i % dumpToConsoleEachNDocs == 0) System.out.println("T[" + tid + "] = " + i);
            }
        } catch (final Exception e) {
            e.printStackTrace();
        } finally {
            // arrive even if this thread failed, so main() is never blocked forever
            phaser.arriveAndAwaitAdvance();
        }
    }

    public static void main(String[] args) {
        for (int i = 0; i < threads; i++) new BombardaMaxima(i).start();
        phaser.arriveAndAwaitAdvance();
        System.out.println("DONE");

        // release SDK resources so the JVM can exit
        cluster.disconnect();
        ce.shutdown();
    }
}
```

5. Watch the index fragmentation rate grow in the UI stats.

6. Wait for code run to end.

7. Wait a little longer (you can also force compaction) until all documents in the bucket have expired. Do not click "Documents" in the UI, because of https://issues.couchbase.com/browse/MB-19758.

8. Finally, open the UI stats. You will see a high fragmentation rate, and [index data size]:[index disk size] ≈ [1]:[1/(1 - fragmentation rate)].
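In the step 4 code, each document's expiry is passed to `JsonDocument.create` as an absolute Unix time in seconds, computed as the current time plus `docTTLms`, divided by 1000. Couchbase treats expiry values of up to 30 days (2,592,000 seconds) as offsets relative to the time of the write, and larger values as absolute Unix timestamps. A relative value of `30` should therefore give the same 30-second lifetime.

Expired documents are removed when they are accessed, when the expiry pager runs, or during compaction. This is why step 7 suggests forcing compaction.

The sketch below computes both expiry forms. `ExpiryMath` and its methods are hypothetical helpers that make no SDK calls.

```java
/** Illustrative expiry helpers mirroring the step 4 code (hypothetical names, no SDK calls). */
final class ExpiryMath {

    /** Expiry values up to this many seconds (30 days) are relative; larger ones are absolute Unix seconds. */
    static final int RELATIVE_LIMIT_SECONDS = 30 * 24 * 60 * 60; // 2,592,000

    private ExpiryMath() {}

    /** Absolute expiry in Unix seconds, computed the same way as in BombardaMaxima. */
    static int absoluteExpiry(long nowMillis, long ttlMillis) {
        return (int) ((nowMillis + ttlMillis) / 1000);
    }

    /** Relative expiry in seconds; only meaningful for TTLs of up to 30 days. */
    static int relativeExpiry(long ttlMillis) {
        long seconds = ttlMillis / 1000;
        if (seconds > RELATIVE_LIMIT_SECONDS) {
            throw new IllegalArgumentException("TTLs above 30 days must be given as an absolute time");
        }
        return (int) seconds;
    }

    public static void main(String[] args) {
        long now = System.currentTimeMillis();
        long ttl = 30 * 1000; // docTTLms in the reproduction code
        System.out.println("absolute expiry: " + absoluteExpiry(now, ttl));
        System.out.println("relative expiry: " + relativeExpiry(ttl));
    }
}
```

Both forms describe the same lifetime for short TTLs. The absolute form used in the reproduction has the advantage that it also works for TTLs longer than 30 days.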

Example final results from my run (see the images below):

Related forum thread: https://forums.couchbase.com/t/index-data-size-index-disk-size-1-50-is-it-normal/8467/2