Details

- Bug
- Resolution: Duplicate
- Critical
- 6.5.0
- Enterprise Edition 6.5.0 build 3748
- Untriaged
- CentOS 64-bit
- No

Description

Build: 6.5.0 build 3748

Scenario:

- 4 node cluster, Couchbase bucket (replicas=3)

- Loaded 10 docs using durability=MAJORITY

- Stopped Memcached on node-2 and node-4 using `kill -STOP pid`

- Loaded a few docs to verify the durability feature

- Resumed Memcached on both the nodes using `kill -CONT pid`

- Validated the doc loading using cbstats
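The pause/resume steps above can be reproduced outside TAF with a small Python sketch. It assumes the memcached pid on each target node is already known (for example from `pgrep memcached`). The helper name `stopped` is hypothetical. This report does not show how TAF implements `simulate_error=stop_memcached`, so this is not presented as its method.

```python
import os
import signal
from contextlib import contextmanager


@contextmanager
def stopped(pid: int):
    """Freeze `pid` with SIGSTOP for the body of the with-block, then SIGCONT it.

    Equivalent to running `kill -STOP pid` before the block and
    `kill -CONT pid` after it. SIGSTOP cannot be caught or ignored, so the
    process stops running while the kernel keeps its sockets open; requests
    sent to it are simply not answered until it is resumed.
    """
    os.kill(pid, signal.SIGSTOP)
    try:
        yield pid
    finally:
        # Always resume, even if the doc loading inside the block raises.
        os.kill(pid, signal.SIGCONT)
```

To stop both nodes, wrap the doc-loading step in `with stopped(pid_node2), stopped(pid_node4): ...`. Here `pid_node2` and `pid_node4` are placeholders for the memcached pids on node-2 and node-4. Using a context manager ensures `SIGCONT` is sent even when the load step fails.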

Observation:

After resuming, cbstats works fine on the targeted nodes. However, the UI then showed the following memcached error, followed by a compactor error. Node-2 also remains in the warmup stage after this.

Control connection to memcached on 'ns_1@10.112.191.102' disconnected: {{badmatch, {error, timeout}},
  [{mc_client_binary, cmd_vocal_recv, 5,
    [{file, "src/mc_client_binary.erl"}, {line, 152}]},
   {mc_client_binary, select_bucket, 2,
    [{file, "src/mc_client_binary.erl"}, {line, 328}]},
   {ns_memcached, do_ensure_bucket, 3,
    [{file, "src/ns_memcached.erl"}, {line, 1117}]},
   {ns_memcached, ensure_bucket, 2,
    [{file, "src/ns_memcached.erl"}, {line, 1103}]},
   {ns_memcached, '-run_check_config/2-fun-0-', 2,
    [{file, "src/ns_memcached.erl"}, {line, 772}]},
   {ns_memcached, '-perform_very_long_call/3-fun-0-', 2,
    [{file, "src/ns_memcached.erl"}, {line, 318}]},
   {ns_memcached_sockets_pool, '-executing_on_socket/3-fun-0-', 3,
    [{file, "src/ns_memcached_sockets_pool.erl"}, {line, 73}]},
   {async, '-async_init/4-fun-2-', 3,
    [{file, "src/async.erl"}, {line, 211}]}]}

This was followed by the compactor error:

Compactor for database `default` (pid [{type,database},
  {important,true},
  {name,<<"default">>},
  {fa,
   {#Fun<compaction_daemon.4.73660631>,
    [<<"default">>,
     {config,
      {30,undefined},
      {30,undefined},
      undefined,false,false,
      {daemon_config,30,131072,20971520}},
     false,
     {[{type,bucket}]}]}}]) terminated unexpectedly: {{{badmatch,
  {error, timeout}},
  [{mc_client_binary, cmd_vocal_recv, 5,
    [{file, "src/mc_client_binary.erl"}, {line, 152}]},
   {mc_client_binary, select_bucket, 2,
    [{file, "src/mc_client_binary.erl"}, {line, 328}]},
   {ns_memcached, do_ensure_bucket, 3,
    [{file, "src/ns_memcached.erl"}, {line, 1117}]},
   {ns_memcached, ensure_bucket, 2,
    [{file, "src/ns_memcached.erl"}, {line, 1103}]},
   {ns_memcached, '-run_check_config/2-fun-0-', 2,
    [{file, "src/ns_memcached.erl"}, {line, 772}]},
   {ns_memcached, '-perform_very_long_call/3-fun-0-', 2,
    [{file, "src/ns_memcached.erl"}, {line, 318}]},
   {ns_memcached_sockets_pool, '-executing_on_socket/3-fun-0-', 3,
    [{file, "src/ns_memcached_sockets_pool.erl"}, {line, 73}]},
   {async, '-async_init/4-fun-2-', 3,
    [{file, "src/async.erl"}, {line, 211}]}]},
  {gen_server, call,
   [{'ns_memcached-default', 'ns_1@10.112.191.102'},
    {raw_stats, <<"diskinfo">>,
     #Fun<compaction_daemon.18.73660631>,
     {<<"0">>, <<"0">>}}, 180000]}}
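The two traces are easier to compare once their Erlang stack frames are extracted programmatically. The Python sketch below assumes each frame is printed in the `{Module, Function, Arity, [{file, ...}, {line, ...}]}` shape seen above. `parse_frames` and `Frame` are hypothetical triage helpers and are not part of ns_server.

```python
import re
from typing import List, NamedTuple

# Matches frames such as:
#   {mc_client_binary, cmd_vocal_recv, 5,
#    [{file, "src/mc_client_binary.erl"}, {line, 152}]}
# Function names may be bare atoms or quoted atoms like '-run_check_config/2-fun-0-'.
FRAME_RE = re.compile(
    r"\{(?P<module>[a-z][a-z0-9_]*),\s*(?P<function>'[^']*'|[a-z][a-z0-9_]*),\s*"
    r"(?P<arity>\d+),\s*"
    r'\[\{file,\s*"(?P<file>[^"]+)"\},\s*\{line,\s*(?P<line>\d+)\}\]\}'
)


class Frame(NamedTuple):
    module: str
    function: str
    arity: int
    file: str
    line: int


def parse_frames(trace: str) -> List[Frame]:
    """Return the stack frames found in `trace`, in the order they appear."""
    return [
        Frame(m["module"], m["function"], int(m["arity"]), m["file"], int(m["line"]))
        for m in FRAME_RE.finditer(trace)
    ]
```

Both traces above yield the same eight frames. Erlang lists the most recent call first, so the first frame is `mc_client_binary:cmd_vocal_recv/5` at line 152, where the `{error, timeout}` badmatch was raised during `select_bucket`.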

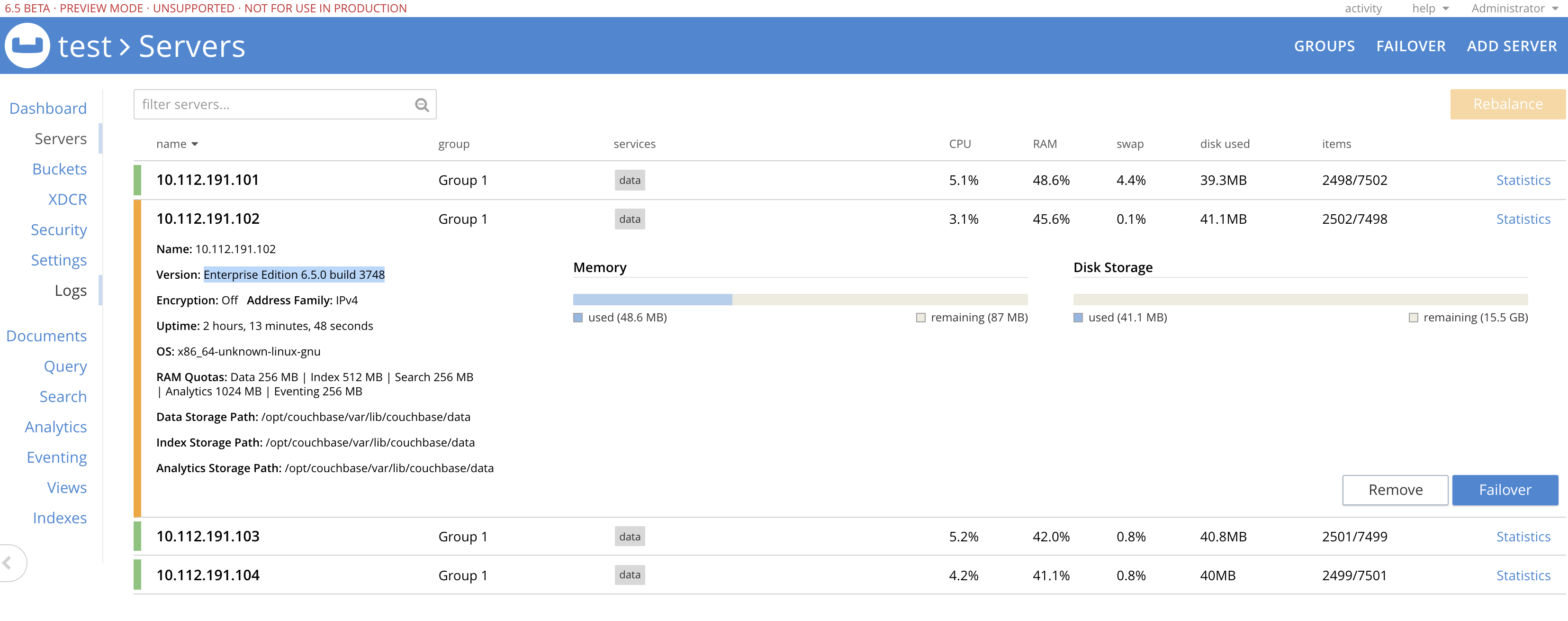

Cluster state with the above error:

TAF testcase:

epengine.durability_failures.TimeoutTests.test_timeout_with_crud_failures,nodes_init=4,replicas=3,num_items=10000,sdk_timeout=60,simulate_error=stop_memcached,durability=MAJORITY
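The TAF testcase line packs the test path and its parameters into one comma-separated string. The minimal Python sketch below splits it, assuming every parameter is a plain `key=value` pair with no embedded commas. `parse_taf_case` is a hypothetical helper. TAF's own argument handling is not shown in this report and may differ, for example in how it converts values to numbers.

```python
from typing import Dict, Tuple


def parse_taf_case(spec: str) -> Tuple[str, Dict[str, str]]:
    """Split 'module.Class.test,key=value,...' into the test path and a parameter dict."""
    test_path, *pairs = spec.split(",")
    params: Dict[str, str] = {}
    for pair in pairs:
        key, sep, value = pair.partition("=")
        if not sep:
            # Every parameter after the test path is expected to be key=value.
            raise ValueError(f"malformed parameter: {pair!r}")
        params[key.strip()] = value.strip()
    return test_path.strip(), params
```

Values are kept as strings, so `replicas` comes back as `"3"` and `simulate_error` as `"stop_memcached"`.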
