Details

- Bug

- Resolution: Fixed

- Critical

- 6.5.0

- Enterprise Edition 6.5.0 build 3748

- Untriaged

- CentOS 64-bit

- No

- KV-Engine Mad-Hatter Beta

Description

Build: 6.5.0-3748

Scenario:

1. 4-node cluster, Couchbase bucket (replicas=3)

2. Initially load 10 docs with durability=MAJORITY (succeeds)

3. Start loading 10K docs in the background

4. Kill memcached on node-4 (10.112.191.104) while SyncWrite creates are happening in parallel

Observation:

Memcached on node-4 recovers, but all the doc ops still fail with `com.couchbase.client.core.error.RequestTimeoutException`.

Once step-3 completes with errors, the failed docs are retried one by one using a separate SDK client with sdk_timeout=5 seconds (same as step-2).

Every individual operation throws `com.couchbase.client.core.error.DurabilityAmbiguousException`, yet the doc creation succeeds. After this, memcached on the replica nodes (node-2, node-3) crashes at the same time with exit code 139 and the back-trace given below. node-1 crashes 16s later (05:19:43.384).
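As a side note on the exit code: assuming the reported status follows the usual shell convention of 128 plus the terminating signal number, 139 corresponds to signal 11, which is SIGSEGV on Linux. A minimal sketch of that decoding follows; `decode_exit_status` is a hypothetical helper name, not part of any Couchbase tooling.

```python
import signal


def decode_exit_status(status: int) -> str:
    """Translate a shell-style exit status into a readable description.

    Assumption: statuses above 128 encode "128 + signal number".
    """
    if status > 128:
        signum = status - 128
        try:
            # signal.Signals maps a platform signal number to its enum name.
            return signal.Signals(signum).name
        except ValueError:
            return f"unknown signal {signum}"
    return f"exited normally with code {status}"


print(decode_exit_status(139))
```

This only interprets the number; it says nothing about which code path raised the signal, which is what the back-trace is for.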

Expected behavior:

As soon as node-4 restarts, all sync_writes should go through without any timeouts or ambiguous exceptions. No memcached crash is expected when writing to replica nodes.

2019-07-13T05:19:27.211-07:00, ns_log:0:info:message(ns_1@10.112.191.103) - Service 'memcached' exited with status 139. Restarting. Messages:

2019-07-13T05:19:27.183050-07:00 CRITICAL /opt/couchbase/bin/memcached() [0x400000+0x8f300]

2019-07-13T05:19:27.183054-07:00 CRITICAL /opt/couchbase/bin/memcached() [0x400000+0x8f3ab]

2019-07-13T05:19:27.183059-07:00 CRITICAL /opt/couchbase/bin/memcached() [0x400000+0x9bf4c]

2019-07-13T05:19:27.183065-07:00 CRITICAL /opt/couchbase/bin/memcached() [0x400000+0x30557]

2019-07-13T05:19:27.183068-07:00 CRITICAL /opt/couchbase/bin/../lib/libevent_core.so.2.1.8() [0x7fabf4202000+0x17107]

2019-07-13T05:19:27.183071-07:00 CRITICAL /opt/couchbase/bin/../lib/libevent_core.so.2.1.8(event_base_loop+0x39f) [0x7fabf4202000+0x1767f]

2019-07-13T05:19:27.183077-07:00 CRITICAL /opt/couchbase/bin/memcached() [0x400000+0x701a9]

2019-07-13T05:19:27.183079-07:00 CRITICAL /opt/couchbase/bin/../lib/libplatform_so.so.0.1.0() [0x7fabf4f23000+0x8f27]

2019-07-13T05:19:27.183083-07:00 CRITICAL /lib64/libpthread.so.0() [0x7fabf2953000+0x7df3]

2019-07-13T05:19:27.183107-07:00 CRITICAL /lib64/libc.so.6(clone+0x6d) [0x7fabf2592000+0xf61ad]
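The frames above are mostly unsymbolized, so a first step when triaging is to split each line into module, symbol, load base, and offset. The following is a rough sketch that assumes every frame has the shape `CRITICAL <module>(<symbol>) [<base>+<offset>]` seen in this log; `Frame` and `parse_frame` are hypothetical names introduced for illustration. Whether a symbolizer wants the offset or the absolute address depends on how each binary was linked, which this report does not state.

```python
import re
from typing import NamedTuple, Optional

# Assumed frame shape, taken from the log lines in this report:
#   <timestamp> CRITICAL <module>(<symbol>) [<base>+<offset>]
FRAME_RE = re.compile(
    r"CRITICAL\s+(?P<module>\S+?)\((?P<symbol>[^)]*)\)\s+"
    r"\[(?P<base>0x[0-9a-fA-F]+)\+(?P<offset>0x[0-9a-fA-F]+)\]"
)


class Frame(NamedTuple):
    module: str   # path of the binary or shared library
    symbol: str   # may be empty when the frame is unsymbolized
    base: int     # load address of the module
    offset: int   # offset of the frame within the module

    @property
    def address(self) -> int:
        """Absolute address of the frame (base + offset)."""
        return self.base + self.offset


def parse_frame(line: str) -> Optional[Frame]:
    """Return a Frame for a back-trace line, or None if it does not match."""
    m = FRAME_RE.search(line)
    if m is None:
        return None
    return Frame(m["module"], m["symbol"], int(m["base"], 16), int(m["offset"], 16))


line = (
    "2019-07-13T05:19:27.183071-07:00 CRITICAL "
    "/opt/couchbase/bin/../lib/libevent_core.so.2.1.8(event_base_loop+0x39f) "
    "[0x7fabf4202000+0x1767f]"
)
frame = parse_frame(line)
if frame is not None:
    print(frame.module, frame.symbol or "<no symbol>", hex(frame.offset))
```

Grouping the parsed frames by module gives the per-binary offsets that can then be fed to whatever symbolizer matches the build's debug symbols.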

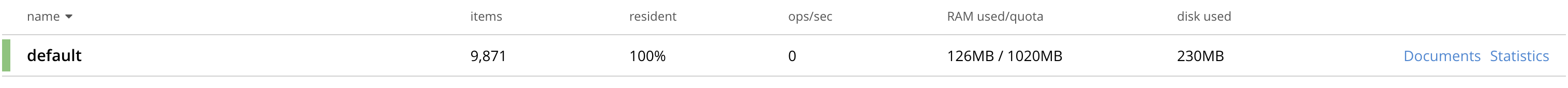

Bucket state after the test execution:

TAF Testcase:

crash_test.crash_process.CrashTest:

test_crash_process,nodes_init=4,replicas=3,num_items=10,process=memcached,service=memcached,sig_type=sigkill,target_node=active,durability=MAJORITY
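To compare this run against other runs, the test line can be split into its name and parameters. This is a small sketch that assumes the line is a test name followed by comma-separated `key=value` pairs, as it appears above; `parse_test_spec` is a hypothetical helper, not a TAF function.

```python
from typing import Dict, Tuple


def parse_test_spec(spec: str) -> Tuple[str, Dict[str, str]]:
    """Split '<test_name>,k1=v1,k2=v2,...' into the name and a parameter dict."""
    name, *pairs = spec.strip().split(",")
    # Split each pair on the first '=' only, so values may contain '='.
    params = dict(pair.split("=", 1) for pair in pairs if pair)
    return name, params


spec = (
    "test_crash_process,nodes_init=4,replicas=3,num_items=10,process=memcached,"
    "service=memcached,sig_type=sigkill,target_node=active,durability=MAJORITY"
)
name, params = parse_test_spec(spec)
print(name, params["replicas"], params["durability"])
```

All values are kept as strings; converting `nodes_init` or `replicas` to integers is left to the caller.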