Details

Bug

Resolution: Fixed

Critical

6.5.0

Untriaged

CentOS 64-bit

Yes

KV-Engine Mad-Hatter GA

Description

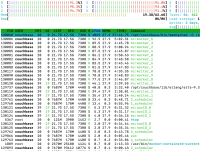

There is a ~15% throughput decrease in "Avg Throughput (ops/sec), Workload A, 3 nodes, 12 vCPU, replicateTo=1". The regression first appears in build 4723: http://172.23.123.43:8000/getchangelog?product=couchbase-server&fromb=6.5.0-4722&tob=6.5.0-4723

4722:

http://perf.jenkins.couchbase.com/job/hebe/4973/ - 118370 ops/sec

https://s3-us-west-2.amazonaws.com/perf-artifacts/jenkins-hebe-4973/172.23.100.190.zip

https://s3-us-west-2.amazonaws.com/perf-artifacts/jenkins-hebe-4973/172.23.100.191.zip

https://s3-us-west-2.amazonaws.com/perf-artifacts/jenkins-hebe-4973/172.23.100.192.zip

https://s3-us-west-2.amazonaws.com/perf-artifacts/jenkins-hebe-4973/172.23.100.193.zip

https://s3-us-west-2.amazonaws.com/perf-artifacts/jenkins-hebe-4973/172.23.100.204.zip

https://s3-us-west-2.amazonaws.com/perf-artifacts/jenkins-hebe-4973/172.23.100.205.zip

https://s3-us-west-2.amazonaws.com/perf-artifacts/jenkins-hebe-4973/172.23.100.206.zip

https://s3-us-west-2.amazonaws.com/perf-artifacts/jenkins-hebe-4973/172.23.100.207.zip

http://cbmonitor.sc.couchbase.com/reports/html/?snapshot=hebe_650-4722_access_5f2e

4723:

http://perf.jenkins.couchbase.com/job/hebe/4977/ - 102525 ops/sec

https://s3-us-west-2.amazonaws.com/perf-artifacts/jenkins-hebe-4977/172.23.100.190.zip

https://s3-us-west-2.amazonaws.com/perf-artifacts/jenkins-hebe-4977/172.23.100.191.zip

https://s3-us-west-2.amazonaws.com/perf-artifacts/jenkins-hebe-4977/172.23.100.192.zip

https://s3-us-west-2.amazonaws.com/perf-artifacts/jenkins-hebe-4977/172.23.100.193.zip

https://s3-us-west-2.amazonaws.com/perf-artifacts/jenkins-hebe-4977/172.23.100.204.zip

https://s3-us-west-2.amazonaws.com/perf-artifacts/jenkins-hebe-4977/172.23.100.205.zip

https://s3-us-west-2.amazonaws.com/perf-artifacts/jenkins-hebe-4977/172.23.100.206.zip

https://s3-us-west-2.amazonaws.com/perf-artifacts/jenkins-hebe-4977/172.23.100.207.zip

http://cbmonitor.sc.couchbase.com/reports/html/?snapshot=hebe_650-4723_access_0f9c
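
For reference, the size of the regression can be worked out from the two throughput figures reported above (118370 for build 4722, 102525 for build 4723). This is a minimal sketch: the helper name `relative_change` is hypothetical and is not part of the perf tooling. Taking 4722 as the baseline, the drop is about 13.4%. The ~15% figure matches the gap measured against the 4723 result instead.

```python
def relative_change(baseline: float, candidate: float) -> float:
    """Return the change from baseline to candidate, as a percentage of baseline."""
    return (candidate - baseline) / baseline * 100.0


# Throughput figures taken from the two Jenkins runs listed in this ticket.
tp_4722 = 118370  # build 6.5.0-4722
tp_4723 = 102525  # build 6.5.0-4723

# Drop relative to the last good build (4722 as baseline).
drop_vs_4722 = relative_change(tp_4722, tp_4723)

# How much faster 4722 was, relative to the regressed build (4723 as baseline).
gain_vs_4723 = relative_change(tp_4723, tp_4722)

print(f"4723 vs 4722: {drop_vs_4722:.1f}%")   # prints "4723 vs 4722: -13.4%"
print(f"4722 vs 4723: {gain_vs_4723:+.1f}%")  # prints "4722 vs 4723: +15.5%"
```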

Comparison: