Details

-

Bug

-

Resolution: Fixed

-

Critical

-

3.1.6, 4.1.2, 4.5.1, 4.6.5, 5.0.1, 5.1.3, 5.5.5, 6.0.2, 6.0.3

-

Triaged

-

Yes

Description

Backport of MB-36424 to 6.0.4

Summary

When couchstore writes out modified node elements during document saves, it is supposed to limit the number of bytes written in a single node to a `chunk_threshold` (by default 1279 bytes). If the node is larger than the limit it should be split into multiple sibling nodes.
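The intended behaviour can be sketched as follows. This is an illustrative model only, not couchstore's actual code: the function name `split_into_nodes` and the exact split rule (start a new node when the next element would push the node past the threshold) are assumptions made for the example.

```python
CHUNK_THRESHOLD = 1279  # default limit in bytes, as stated in the summary


def split_into_nodes(element_sizes, threshold=CHUNK_THRESHOLD):
    """Group element sizes (in bytes) into sibling nodes.

    Hypothetical split rule: a new node is started whenever adding the
    next element would take the current node past `threshold`.
    """
    nodes = []
    current, current_bytes = [], 0
    for size in element_sizes:
        if current and current_bytes + size > threshold:
            nodes.append(current)
            current, current_bytes = [], 0
        current.append(size)
        current_bytes += size
    if current:
        nodes.append(current)
    return nodes


# 100 elements of 40 bytes each: at most 31 fit under 1279 bytes per node.
nodes = split_into_nodes([40] * 100)
print(len(nodes), [sum(n) for n in nodes])
```

With the limit respected, no node grows beyond the threshold regardless of how many elements are written.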

However, this limit is not respected, resulting in overly-large nodes being written out. In the case of the by-seqno B-Tree (which always writes values to the rightmost leaf as seqnos are increasing), it results in all leaf elements residing in a single leaf node. Moreover, this means that adding another element to the B-Tree effectively re-writes the entire tree, resulting in massive Write Amplification.
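To see why an unsplit rightmost leaf leads to growing Write Amplification, consider a toy model. It assumes an append-only file in which every insert rewrites the rightmost leaf in full, and it ignores interior nodes, headers, and document bodies. The function names and the 40-byte element size are hypothetical, and the numbers it produces are not meant to match the figures measured in this ticket.

```python
def leaf_bytes_written(num_inserts, element_size, threshold=None):
    """Total leaf bytes written for `num_inserts` increasing-seqno inserts.

    Each insert rewrites the rightmost leaf in full.
    threshold=None models the bug: the leaf is never split.
    """
    total = 0
    leaf_bytes = 0
    for _ in range(num_inserts):
        if threshold is not None and leaf_bytes + element_size > threshold:
            # Leaf is full: leave it untouched and start a new rightmost leaf.
            leaf_bytes = 0
        leaf_bytes += element_size
        total += leaf_bytes
    return total


def write_amplification(num_inserts, element_size, threshold=None):
    """Leaf bytes written divided by logical bytes inserted."""
    logical = num_inserts * element_size
    return leaf_bytes_written(num_inserts, element_size, threshold) / logical


print(write_amplification(1000, 40, threshold=1279))  # limit respected
print(write_amplification(1000, 40, threshold=None))  # limit ignored
```

In this model the amplification stays bounded when the threshold is honoured, but grows linearly with the number of elements when the leaf is never split.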

Steps to Reproduce

- Start 2-node cluster run (single replica):

    ./cluster_run --nodes=2

- Start a SyncWrite workload; single threaded client updating the same 100,000 items (each key 10 times) with level=persistMajority:

    ./engines/ep/management/sync_repl.py localhost:12000 Administrator asdasd default loop_bulk_setD key value 100000 1000000 3

- Observe the op/s and Write Amplification.

Expected Results

- Op/s should be broadly constant (given both key and seqno B-Tree should have a constant number of items in them).

- Write amplification should also be broadly constant.

Actual Results

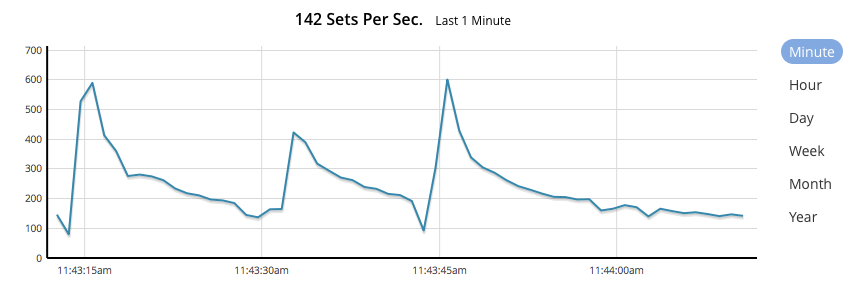

- The op/s quickly drops from a peak of ~600 down to 150:

- The Write Amplification increases (corresponding with the drop in op/s) from 5.6x up to 743x.

- Both op/s and Write Amplification temporarily recover when compaction occurs, but the same pattern is observed over time: