Details

Bug

Resolution: Fixed

Major

Cheshire-Cat

Description

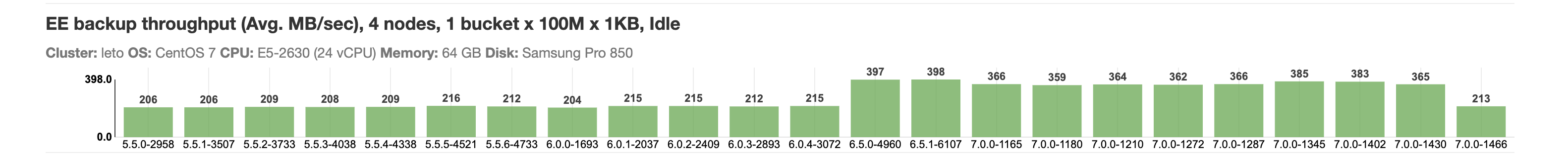

Observing a ~40% drop in cbbackupmgr backup throughput in build 7.0.0-1466.

I am also seeing an increase in the time taken by the test case below.


Still triaging to identify the build that introduced the degradation.
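Narrowing the regression down to a single build is essentially a binary search over the build range. The following is a minimal sketch, assuming builds are identified by ascending integers, the oldest build in the range is known good, the newest (e.g. 1466) is known bad, and the regression persists once introduced. The predicate `is_regressed` is hypothetical: in practice it would run the backup benchmark against a given build and compare throughput with a baseline. It is not part of cbbackupmgr.

```python
from typing import Callable, Sequence


def throughput_drop(baseline: float, current: float) -> float:
    """Percentage drop of `current` throughput relative to `baseline`."""
    return (baseline - current) * 100.0 / baseline


def first_regressed_build(
    builds: Sequence[int],
    is_regressed: Callable[[int], bool],
) -> int:
    """Return the earliest build for which `is_regressed` is True.

    Assumes `builds` is sorted ascending, builds[0] is known good,
    builds[-1] is known bad, and once a build regresses every later
    build also regresses.
    """
    lo, hi = 0, len(builds) - 1  # invariant: builds[lo] good, builds[hi] bad
    while hi - lo > 1:
        mid = (lo + hi) // 2
        if is_regressed(builds[mid]):  # hypothetical benchmark check
            hi = mid
        else:
            lo = mid
    return builds[hi]
```

A hypothetical predicate could flag a build when `throughput_drop(baseline, measured)` exceeds some threshold. Bisection needs only about log2(n) benchmark runs for n candidate builds, which matters when each backup run is slow.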