Details

Bug

Resolution: Incomplete

Major

Cheshire-Cat

Triaged

1

Unknown

Description

Job: http://perf.jenkins.couchbase.com/job/rhea-5node1/12/

Build: 7.0.0-2083

Logs:

https://s3.amazonaws.com/bugdb/jira/qe/collectinfo-2020-05-18T154200-ns_1%40172.23.97.21.zip

https://s3.amazonaws.com/bugdb/jira/qe/collectinfo-2020-05-18T154200-ns_1%40172.23.97.22.zip

https://s3.amazonaws.com/bugdb/jira/qe/collectinfo-2020-05-18T154200-ns_1%40172.23.97.23.zip

https://s3.amazonaws.com/bugdb/jira/qe/collectinfo-2020-05-18T154200-ns_1%40172.23.97.24.zip

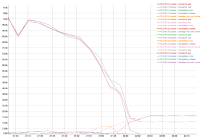

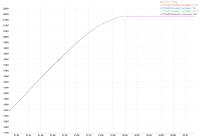

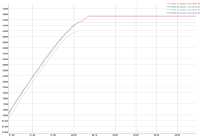

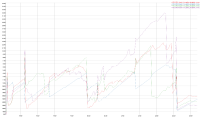

During the load phase of the Magma cbc-pillowfight tests, throughput dropped from 350K to 26K ops per second. After the drop, the number of items stopped increasing.