Details

- Bug
- Resolution: Won't Fix
- Major
- None
- 6.6.1
- Untriaged
- 1
- Unknown

Description

Attachments

Issue Links

- relates to

-

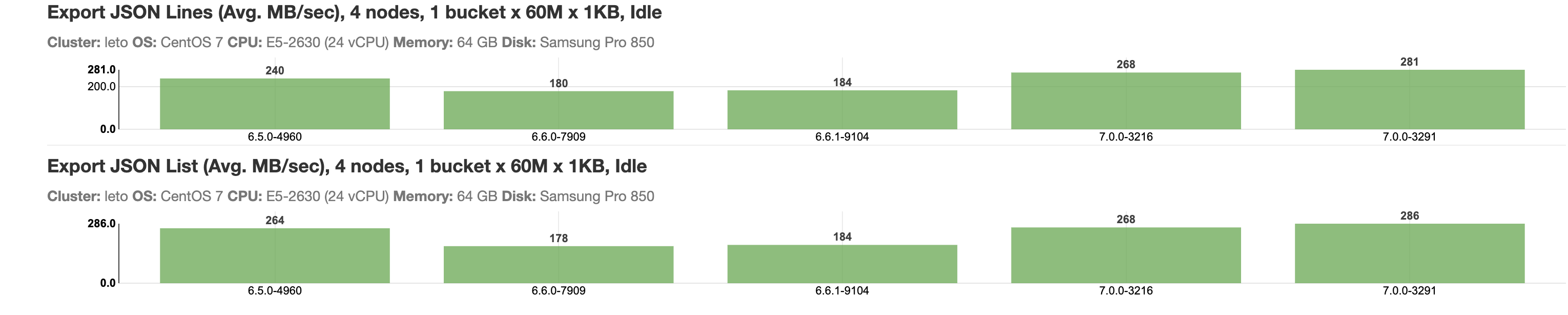

MB-38140 cbexport export throughput drop in CC build 7.0.0-1165 (or earlier)

-

- Closed

-