Description

Build: 7.0.0-5017

Scenario:

Performing scheduled backups on a multi-node cluster with a single bucket and other services running.

Observation:

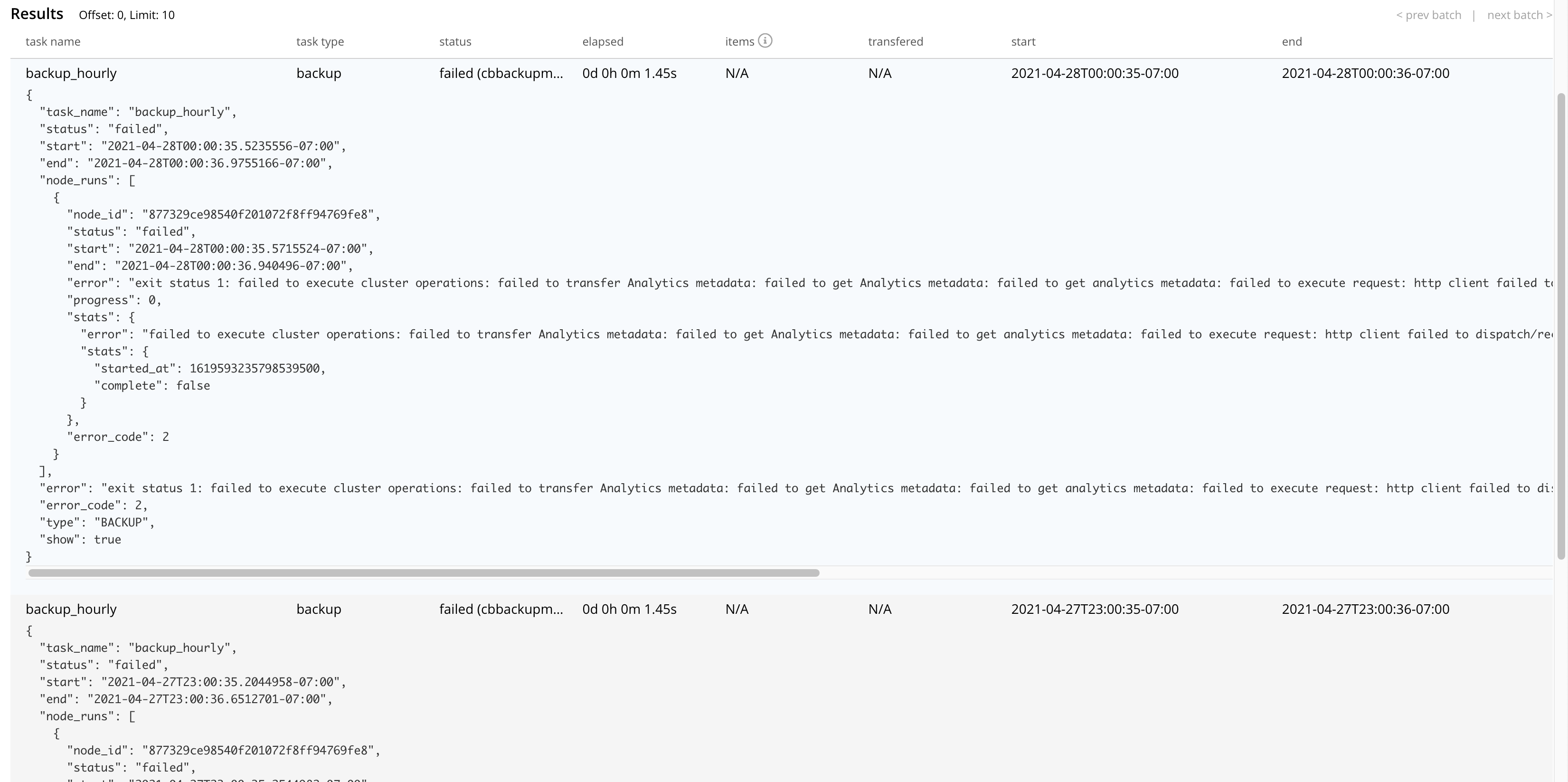

One of the scheduled backups failed with "exit status 1". The reason reported was "failed to get Analytics metadata":

    {
      "task_name": "backup_hourly",
      "status": "failed",
      "start": "2021-04-27T23:00:35.2044958-07:00",
      "end": "2021-04-27T23:00:36.6512701-07:00",
      "node_runs": [
        {
          "node_id": "877329ce98540f201072f8ff94769fe8",
          "status": "failed",
          "start": "2021-04-27T23:00:35.2544982-07:00",
          "end": "2021-04-27T23:00:36.6142778-07:00",
          "error": "exit status 1: failed to execute cluster operations: failed to transfer Analytics metadata: failed to get Analytics metadata: failed to get analytics metadata: failed to execute request: http client failed to dispatch/receive request/response: Get \"http://172.23.138.127:8095/api/v1/backup\": dial tcp 172.23.138.127:8095: connectex: No connection could be made because the target machine actively refused it.",
          "progress": 0,
          "stats": {
            "error": "failed to execute cluster operations: failed to transfer Analytics metadata: failed to get Analytics metadata: failed to get analytics metadata: failed to execute request: http client failed to dispatch/receive request/response: Get \"http://172.23.138.127:8095/api/v1/backup\": dial tcp 172.23.138.127:8095: connectex: No connection could be made because the target machine actively refused it.",
            "stats": {
              "started_at": 1619589635472537600,
              "complete": false
            }
          },
          "error_code": 2
        }
      ],
      "error": "exit status 1: failed to execute cluster operations: failed to transfer Analytics metadata: failed to get Analytics metadata: failed to get analytics metadata: failed to execute request: http client failed to dispatch/receive request/response: Get \"http://172.23.138.127:8095/api/v1/backup\": dial tcp 172.23.138.127:8095: connectex: No connection could be made because the target machine actively refused it.",
      "error_code": 2,
      "type": "BACKUP",
      "show": true
    }
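
For triage, the wrapped error in the task record above can be reduced to its innermost cause and the endpoint that refused the connection. The following is a minimal sketch, not part of the backup service: `summarize_failures` and `SAMPLE_TASK` are hypothetical names, the sample record is trimmed to the fields used, and the parsing assumes the error segments are joined with `": "` as in the output shown.

```python
import re

# Trimmed copy of the failed task record from this report (only the fields used below).
SAMPLE_TASK = {
    "task_name": "backup_hourly",
    "status": "failed",
    "node_runs": [
        {
            "node_id": "877329ce98540f201072f8ff94769fe8",
            "status": "failed",
            "error": (
                "exit status 1: failed to execute cluster operations: "
                "failed to transfer Analytics metadata: failed to get Analytics metadata: "
                "failed to get analytics metadata: failed to execute request: "
                "http client failed to dispatch/receive request/response: "
                'Get "http://172.23.138.127:8095/api/v1/backup": '
                "dial tcp 172.23.138.127:8095: connectex: "
                "No connection could be made because the target machine actively refused it."
            ),
        }
    ],
}


def summarize_failures(task):
    """Return one (node_id, endpoint, innermost_cause) tuple per failed node run."""
    summaries = []
    for run in task.get("node_runs", []):
        if run.get("status") != "failed":
            continue
        error = run.get("error", "")
        # Assumption: wrapped errors are joined with ": ", so the last segment is the innermost cause.
        cause = error.rsplit(": ", 1)[-1]
        # Extract the host and port that the dial attempt targeted, if the message contains one.
        match = re.search(r"dial tcp (\S+?):(\d+):", error)
        endpoint = (match.group(1), int(match.group(2))) if match else None
        summaries.append((run.get("node_id"), endpoint, cause))
    return summaries


for node_id, endpoint, cause in summarize_failures(SAMPLE_TASK):
    print(node_id, endpoint, cause)
```

For this record, the summary points at `172.23.138.127:8095` refusing the connection, which suggests the Analytics endpoint on that node was not listening when the backup ran.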

Note: The next consecutive backup, started at '2021-04-28T00:00:35-07:00', also failed for the same reason.
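
Because the failure repeats across runs, one quick check is whether the endpoint named in the error accepts TCP connections at all. The sketch below is an illustrative probe using only the Python standard library. `port_accepts_connections` is a hypothetical helper, and the host and port in the usage comment are copied from the error message in this report.

```python
import socket


def port_accepts_connections(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port can be established within the timeout."""
    try:
        # create_connection raises an OSError subclass on refusal, timeout, or unreachable host.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


# Usage, run from the node that performs the backup:
#   port_accepts_connections("172.23.138.127", 8095)
```

A `False` result from the backup node would be consistent with the "actively refused" error. It does not by itself say why the service is down.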

The full backup directory data is attached as backups.tar.gz.

Issue Links

- duplicates
  - MB-45869 [System Test][Analytics] Rebalance failed with error - java.lang.IllegalStateException: timed out waiting for all nodes to join & cluster active (missing nodes: [xxxx], state: ACTIVE) (Closed)
  - MB-46782 [BP 6.6.3][System Test][Analytics] Rebalance failed with error - java.lang.IllegalStateException: timed out waiting for all nodes to join & cluster active (missing nodes: [xxxx], state: ACTIVE) (Closed)