Details

- Task
- Resolution: Fixed
- Critical
- Cheshire-Cat
- 1

Description

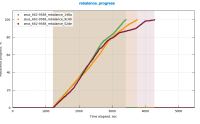

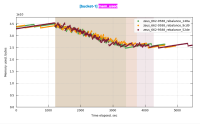

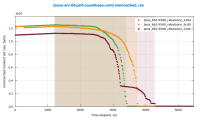

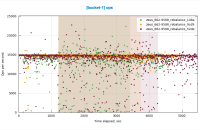

Compared to 6.6.2, the Windows rebalance tests show higher rebalance times in 7.0.0, for both rebalance-swap and rebalance-out.

http://showfast.sc.couchbase.com/#/timeline/Windows/reb/kv/DGM

Rebalance-swap (min), 3 -> 3, 150M x 1KB, 15K ops/sec (90/10 R/W), 10% cache miss rate

| Build | Rebalance Time (min) | Job |
|---|---|---|
| 6.6.2-9588 | 89.7 | http://perf.jenkins.couchbase.com/job/zeus/6578/ |
| 7.0.0-5017 | 97.1 | http://perf.jenkins.couchbase.com/job/zeus/6575/ |

cbmonitor comparison: http://cbmonitor.sc.couchbase.com/reports/html/?snapshot=zeus_662-9588_rebalance_67cc&snapshot=zeus_700-5017_rebalance_d419

Rebalance-out (min), 4 -> 3, 150M x 1KB, 15K ops/sec (90/10 R/W), 10% cache miss rate

| Build | Rebalance Time (min) | Job |
|---|---|---|
| 6.6.2-9588 | 42.1 | http://perf.jenkins.couchbase.com/job/zeus/6577/ |
| 7.0.0-5017 | 54.5 | http://perf.jenkins.couchbase.com/job/zeus/6582/ |

cbmonitor comparison: http://cbmonitor.sc.couchbase.com/reports/html/?snapshot=zeus_662-9588_rebalance_d519&snapshot=zeus_700-5017_rebalance_f2cf
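
To put a number on the gap, the relative slowdown can be computed directly from the rebalance times reported above. The following is a minimal sketch, not part of the perf tooling: the helper name `regression_pct` and the `results` dictionary are hypothetical and only exist for this illustration; the times are copied from the two tables.

```python
def regression_pct(baseline: float, candidate: float) -> float:
    """Percent change of candidate relative to baseline (positive = slower)."""
    return (candidate - baseline) / baseline * 100.0


# Rebalance times in minutes as (6.6.2-9588, 7.0.0-5017), taken from the tables.
# The dictionary layout is an assumption made for this example only.
results = {
    "Rebalance-swap, 3 -> 3": (89.7, 97.1),
    "Rebalance-out, 4 -> 3": (42.1, 54.5),
}

for name, (t_662, t_700) in results.items():
    print(f"{name}: {regression_pct(t_662, t_700):+.1f}%")
# Rebalance-swap, 3 -> 3: +8.2%
# Rebalance-out, 4 -> 3: +29.5%
```

By this measure the rebalance-out case regresses noticeably more than the swap case, which may help decide which cbmonitor comparison to look at first.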