Description

Hi,

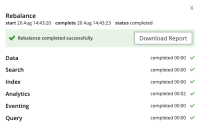

When creating (or upgrading to) an Operator deployment using server 7.1.0-1169 (the most recently available Docker image), the Operator repeatedly tries to rebalance because /pools/default responds with "balanced": false (full response attached). However, the server UI reports that the rebalance was successful (image and rebalance report attached).

This happens with a basic Operator deployment - no buckets, data, etc. on the cluster, and no interaction from me. I have tested further and can confirm this does not happen when doing the same thing (on the same Operator deployment) with server 6.6.3, 7.0.0, 7.0.1, or 7.0.2, which leads me to suspect the issue is in the server rather than the Operator.
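For anyone trying to reproduce this, the check can be approximated outside the Operator with a short script. This is only a sketch: it assumes the admin REST port is 8091 and that the response carries the `balanced` and `rebalanceStatus` fields. The helper names `fetch_pool_info` and `needs_rebalance` are made up for illustration and are not the Operator's actual logic.

```python
import base64
import json
import urllib.request


def fetch_pool_info(host, user, password, port=8091):
    """GET /pools/default from a server node and return the parsed JSON.

    Port 8091 and plain HTTP are assumptions; adjust for your deployment.
    """
    url = f"http://{host}:{port}/pools/default"
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    req = urllib.request.Request(url, headers={"Authorization": f"Basic {token}"})
    with urllib.request.urlopen(req, timeout=10) as resp:
        return json.load(resp)


def needs_rebalance(pool_info):
    """Hypothetical check: the cluster says it is unbalanced while no rebalance is running.

    Missing fields are treated as "balanced" / "none" (an assumption of this sketch).
    """
    balanced = pool_info.get("balanced", True)
    status = pool_info.get("rebalanceStatus", "none")
    return (not balanced) and status == "none"
```

Calling `needs_rebalance(fetch_pool_info("<node-address>", "<user>", "<password>"))` right after the UI shows a successful rebalance should return `True` on an affected 7.1.0-1169 cluster if the behaviour described above holds, and `False` on the 7.0.x versions listed.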

Attachments

Issue Links

- relates to: K8S-2362 Continuous Rebalance on Server 7.1.0 (Closed)