Details

- Bug
- Resolution: Fixed
- Major
- 7.1.0
- Untriaged
- 1
- Yes

Description

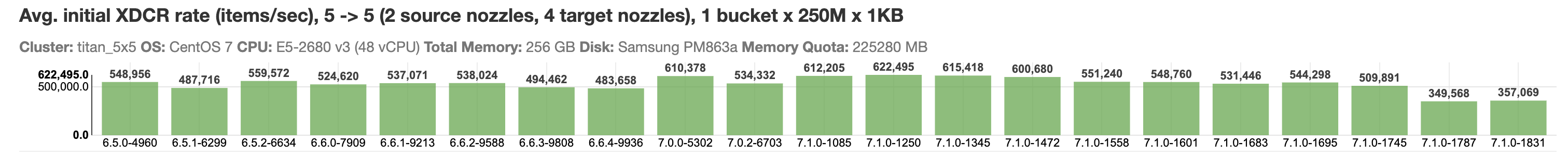

Avg. initial XDCR rate (items/sec), 5 -> 5 (2 source nozzles, 4 target nozzles), 1 bucket x 250M x 1KB

| Build | Avg. initial XDCR rate (items/sec) | Job |
|---|---|---|
| 7.1.0-1745 | 509,891 | http://perf.jenkins.couchbase.com/job/titan/12655/ |
| 7.1.0-1787 | 349,568 | http://perf.jenkins.couchbase.com/job/titan/12744/ |
| 7.1.0-1831 | 357,069 | http://perf.jenkins.couchbase.com/job/titan/12822/ |
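
To put a number on the drop, the rates in the table above can be compared against the first listed build. This is only a sketch: treating 7.1.0-1745 as the baseline is an assumption, and the `percent_change` helper is hypothetical, not part of any Couchbase or perf-test tooling.

```python
# Rates (items/sec) copied from the table above, keyed by build.
rates = {
    "7.1.0-1745": 509_891,
    "7.1.0-1787": 349_568,
    "7.1.0-1831": 357_069,
}

# Assumption: the earliest listed build is the pre-regression baseline.
BASELINE = "7.1.0-1745"


def percent_change(baseline: float, value: float) -> float:
    """Relative change of value versus baseline, in percent (hypothetical helper)."""
    return (value - baseline) / baseline * 100.0


# Percent change of every later build relative to the baseline build.
changes = {
    build: percent_change(rates[BASELINE], rate)
    for build, rate in rates.items()
    if build != BASELINE
}

for build, change in changes.items():
    print(f"{build}: {change:+.1f}% vs {BASELINE}")
```

Under that assumption, both later builds come out roughly 30% below 7.1.0-1745.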

Issue Links

- relates to MB-50095 XDCR - p2p - has payloadCompressed but no payload after deserialization (Closed)