Details

- Bug
- Resolution: Unresolved
- Major
- 6.6.5
- Untriaged
- 1
- Unknown

Description

Build: 6.6.5-10068

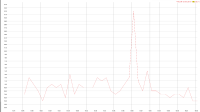

Test: Avg. initial XDCR rate (items/sec), 5 -> 5 (2 source nozzles, 4 target nozzles), 1 bucket x 1G x 1KB, DGM

http://showfast.sc.couchbase.com/#/timeline/Linux/xdcr/init_multi/all

| Build | items/sec |
|---|---|
| 6.6.3-9808 | 502544 |
| 6.6.5-10068 | 445342, 451213 |
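To put a number on the regression, here is a minimal sketch that computes the relative drop of each 6.6.5-10068 run against the 6.6.3-9808 baseline. The throughput figures are the ones reported above; the helper name `percent_drop` and the choice to also report the mean of the two runs are illustrative assumptions, not part of the showfast tooling.

```python
# Throughput figures copied from the results reported in this ticket.
baseline = 502544          # items/sec, build 6.6.3-9808
runs = [445342, 451213]    # items/sec, build 6.6.5-10068 (two runs)


def percent_drop(reference, value):
    """Relative drop of `value` below `reference`, in percent (hypothetical helper)."""
    return (reference - value) / reference * 100.0


# Drop of each individual run, and of the mean of the runs, versus the baseline.
drops = [percent_drop(baseline, r) for r in runs]
mean_drop = percent_drop(baseline, sum(runs) / len(runs))

for run, drop in zip(runs, drops):
    print(f"{run} items/sec: {drop:.2f}% below baseline")
print(f"mean of runs: {mean_drop:.2f}% below baseline")
```

With these figures both runs come out roughly 10 to 11 percent below the 6.6.3 baseline.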

All other tests look good.

Looks like 6.6.5 may be using a bit more memory.