Details

- Bug
- Resolution: Unresolved
- Major
- 7.1.0, 7.1.1, 7.1.2, 7.1.3, 7.1.4, 7.2.0
- \>= 7.1.x
- Untriaged
- 0
- Unknown

Description

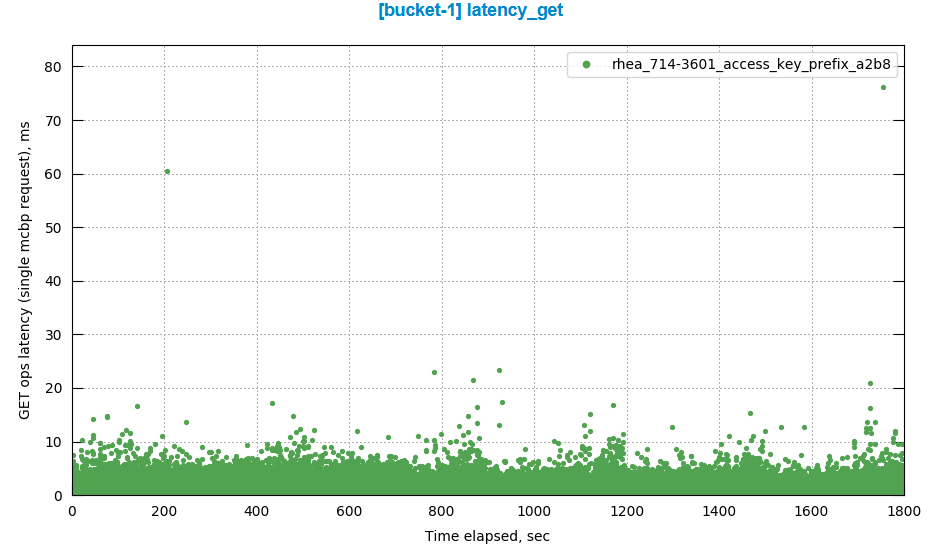

We've noticed that, at low resident ratios and high ops/sec (with both GETs and SETs), Magma compaction appears to impact front-end GET latency. Although we don't have empirical data for this, durable writes with persistence would also be expected to be affected.

As far as I can tell, this isn't a regression but something that has been around since Magma was first introduced. It also doesn't appear to have been getting worse over time.

The reasoning behind this conclusion is in this Slack discussion: https://couchbase.slack.com/archives/CF4FTNFEC/p1678898374286419

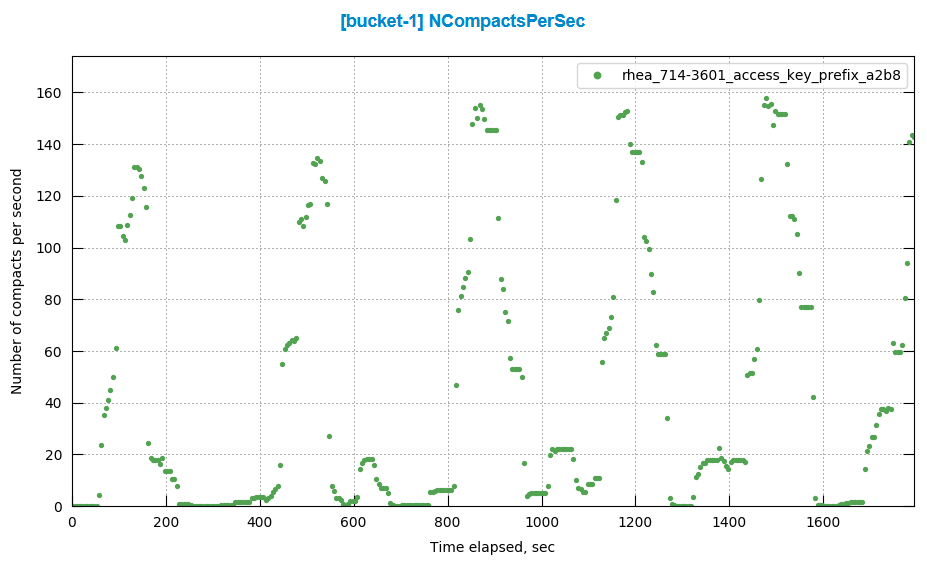

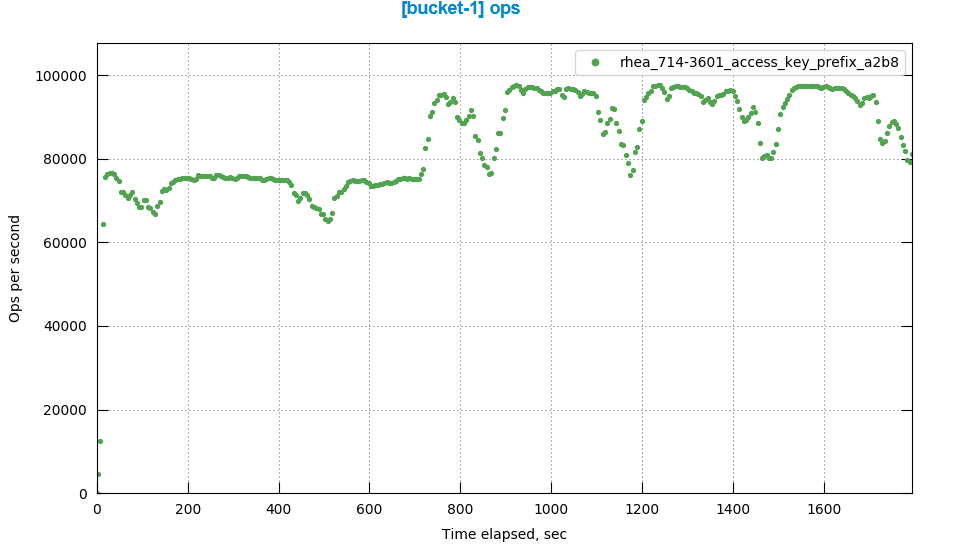

Essentially, I first noticed flusher dedupe spikes, which correlated with (and were caused by) disk write queue spikes. The disk write queue spikes in turn appeared to be caused by something slowing down writes to disk, which looked to be compaction (specifically, the number of bytes read/written during compaction). From there we saw that bg_fetch time was also affected, and from that we verified that front-end GET latency was noticeably affected too. Ops/sec was affected as well, since we use a sequential workload generator.

So far I have only found this behaviour in tests that have low (single-digit) resident ratios and push high ops/sec, where "high" is relative to the hardware's capabilities. Some examples of tests where we see this kind of behaviour (admittedly it's sometimes quite subtle):

- Avg Throughput (ops/sec), Workload A, 3 nodes, 1 bucket x 1.5B x 1KB, 1% Resident Ratio, Magma

- Avg Throughput (ops/sec), 4 nodes, 1 bucket x 1B x 1KB, 90/10 R/W, Uniform distribution, 2% Resident Ratio, Magma

- 99.9th percentile GET Latency(ms), Workload S0.3, 2 nodes, 250M x 1KB, 20K ops/sec (25/50/25 C/R/W), Power distribution (α=10), 2.4% Resident Ratio, Magma

- 99.9th percentile GET Latency(ms), Workload S0.4, 2 nodes, 250M x 1KB, 20K ops/sec (90/10 C/R), Power distribution (α=10), 2.4% Resident Ratio, Magma

I took the cbmonitor images from the report for this 7.1.4 throughput test: http://cbmonitor.sc.couchbase.com/reports/html/?snapshot=rhea_714-3601_access_key_prefix_a2b8

Comparing 7.1.4 with 7.2.0 for that test, we see about the same impact in both: http://cbmonitor.sc.couchbase.com/reports/html/?snapshot=rhea_720-5241_access_key_prefix_0d08&snapshot=rhea_714-3601_access_key_prefix_a2b8